I wrote an article the other day titled “Tesla Proved Many People Wrong For Years — Is This Time The Same, Or Different?” The core points of the article were these:

- Many people said Tesla couldn’t do what it ended up doing at many stages of the company’s development.

- I believed Tesla could do those things.

- Now, I’m having a hard time believing in Tesla’s approach to robotaxis and its plan to roll them out within a few years (even though I argued the opposite a decade ago, and even up to 5 years ago).

- So, I’m wondering, have I become the skeptic who will be proven wrong, or am I right to think it’s different this time and Tesla won’t achieve its robotaxi targets (again)?

Down in the comments, a reader, Doug Sanden, wrote the following:

“FSD training — log linear?

- increasing amount of data needed for linear progress, or decreasing progress for linear increases in data:

video ‘Has Generative AI Already Peaked?’ – Computerphile - diminishing returns?

- he’s describing the results from this paper:

- ‘No “Zero-Shot” Without Exponential Data: Pretraining Concept Frequency Determines Multimodal Model Performance’, Udandarao et al, 2024

- inefficient log-linear scaling trend

- exponential need for training data”

I looked up that video and watched it. Here it is, and I highly recommend giving it a watch:

Again, don’t skip the video, but I’ll try to summarize the key points here:

- Generative AI is amazing and doing great things.

- Because of what it can do, it seems like magic to many and also gives people the belief that adding more and more data, or building bigger and bigger models — or doing both — will make figuring out almost anything possible and will enable more and more amazing technological solutions.

- However, in simple terms, there are diminishing returns when the desired output or desired product gets more complicated, more nuanced.

- Also, as you attempt to achieve those much more difficult goals, you end up pouring an enormous amount of money into data collection and model processing, and you can end up down a deep money pit essentially chasing unicorns and butterfly farts.
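To make the “log-linear” idea from Doug’s comment a bit more concrete, here is a minimal sketch of what that kind of scaling trend implies. Every number in it is a made-up illustration value (the starting dataset size, the starting score, and the gain per tenfold increase in data are all assumptions of mine), and none of it comes from Tesla, the video, or the paper. It only shows the shape of the problem: if a score goes up by a fixed amount each time you multiply the data by 10, then steady progress needs exponentially more data.

```python
import math

# All constants below are made-up illustration values, not measurements
# from Tesla, the Computerphile video, or the Udandarao et al. paper.
BASE_EXAMPLES = 1_000      # hypothetical dataset size where the score is BASE_SCORE
BASE_SCORE = 50.0          # hypothetical score at BASE_EXAMPLES
GAIN_PER_TENFOLD = 10.0    # hypothetical points gained per 10x more data


def score(n_examples):
    """Log-linear trend: the score rises by a fixed amount per 10x more data."""
    return BASE_SCORE + GAIN_PER_TENFOLD * math.log10(n_examples / BASE_EXAMPLES)


def examples_needed(target_score):
    """Invert the trend: how much data is required to reach a given score."""
    tenfold_steps = (target_score - BASE_SCORE) / GAIN_PER_TENFOLD
    return BASE_EXAMPLES * 10 ** tenfold_steps


if __name__ == "__main__":
    # Equal steps in score, and the data each one would require.
    for target in (60, 70, 80, 90):
        print(f"score {target}: about {examples_needed(target):,.0f} examples")
```

Under these assumed numbers, every additional 10 points costs ten times as much data as the previous 10 points did. Flip it around and the same curve says that adding a fixed amount of data buys less and less improvement each time.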

As it relates to Tesla as a company and Tesla Full Self Driving (FSD) as a product, this is basically just a better explanation of something I’ve been concerned about for a while. I do think Tesla risks chasing diminishing returns with its approach to FSD, which I think could burn an enormous amount of cash without Tesla being able to roll out robotaxis. I also think this relates to the “seesaw problem” I’ve discussed a few times. That’s just the effect of trying to solve very nuanced, complicated problems with the hardware and software approach Tesla is using.

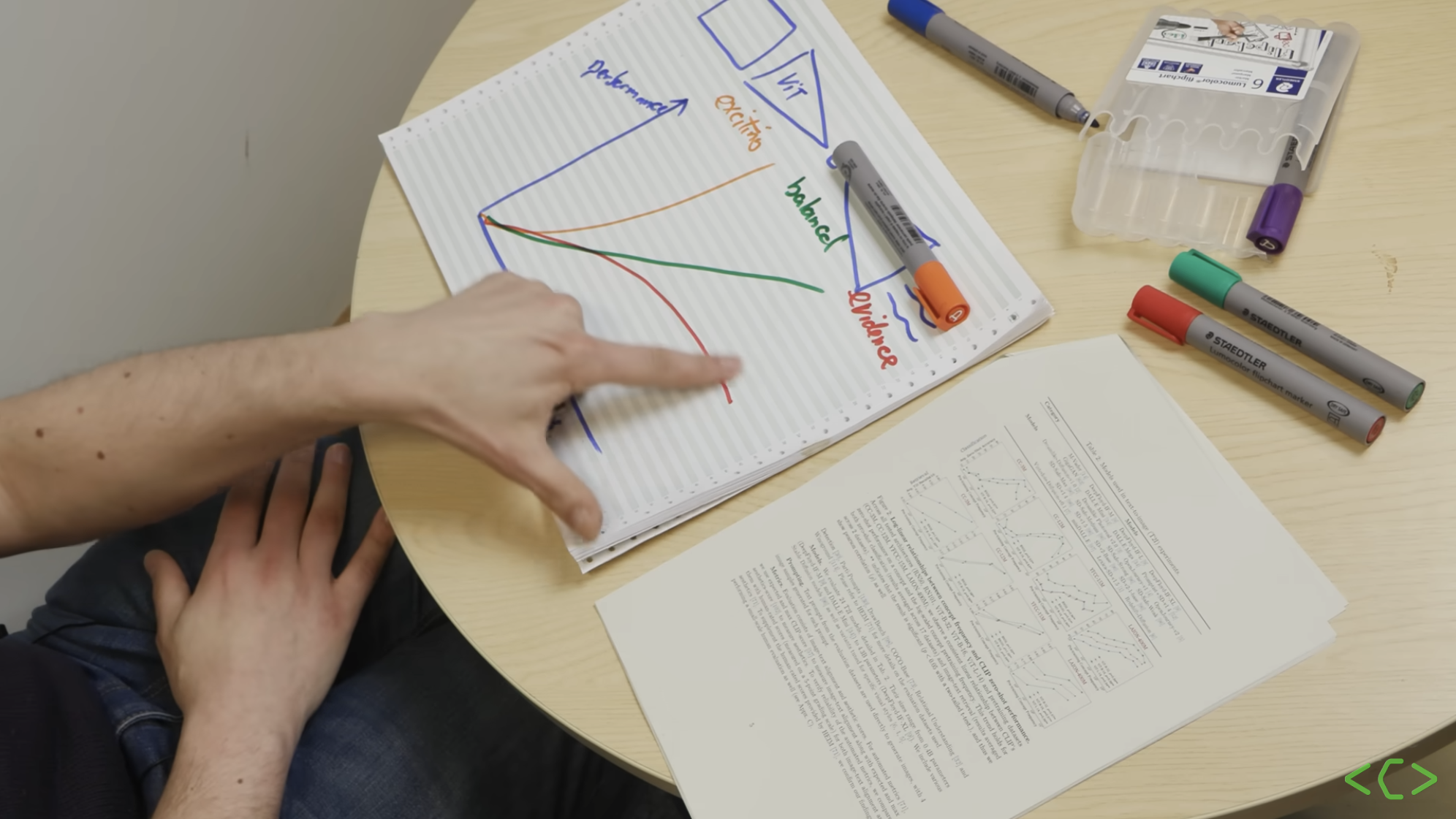

Maybe I’m wrong, as I noted in that article linked at the top. But I end up in a very similar place to this guy at the end of his video, where he indicates that he expects generative AI to follow the red line on the graph rather than the yellow one, but that it would be exciting if it did end up following the yellow one:

We’ll see. We’re still far away from Tesla robotaxis, but let’s see how the tech progresses.
