Every data center on Earth is a silent furnace. The electricity feeding its processors, memory, and storage does not stay as electricity for long. Each calculation, each query, each AI inference, ends as heat. Nothing is stored chemically or locked away as potential energy. The physics is absolute: every MWh that enters a data center leaves as thermal energy. In an era when millions of servers run around the clock, that heat adds up to a global by-product of staggering magnitude. The challenge is not in generating it, but in catching it before it disappears into the sky.

Historically, the industry treated heat as a problem to get rid of, not as a resource. Early data centers vented it into the air with massive HVAC systems. Cooling systems often consumed 20–40% of the total power just to keep equipment within safe operating temperatures. That mindset made sense when computing power was scarce and electricity was cheap. But the rise of hyperscale facilities, artificial intelligence clusters, and carbon budgets is changing the equation.

This hasn’t been a problem in the past, as claims of exponential growth in data center electricity demand have failed to materialize in every decade since I first became aware of them as a professional in the technology industry. It might become an actual concern in the age of AI, although I expect economics to drive massive software efficiencies, rather than simply throwing more hardware at problems, to a much greater degree than most analysts expect. The DeepSeek results and the dark data centers in China both point in that direction.

But that said, every data center is a heat opportunity. When a facility uses 100 MW of continuous power, the question naturally arises: could that same 100 MW of heat serve a purpose beyond the server hall?

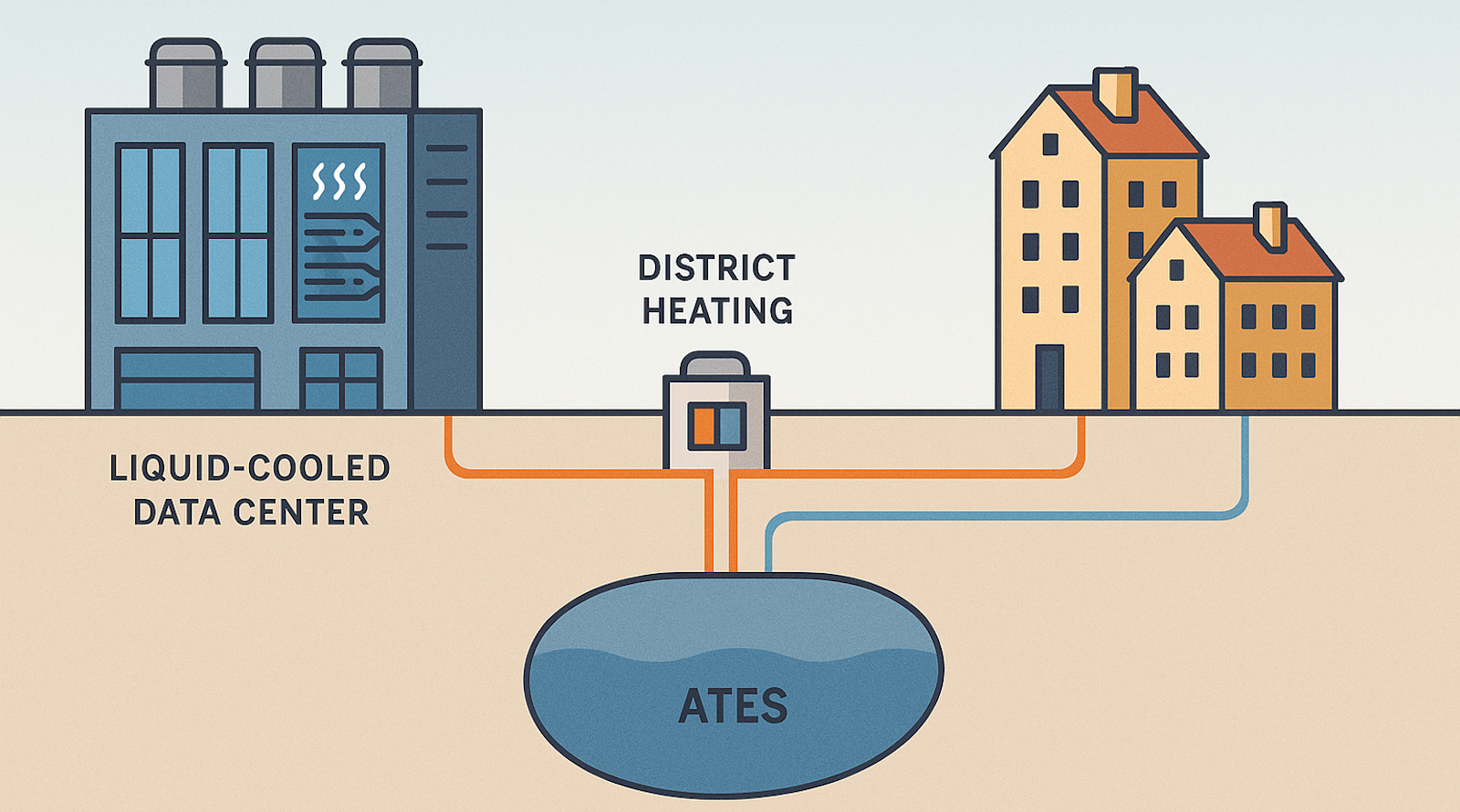

The answer depends on how the heat is captured and where it can go. Air cooling remains common, but air is a poor carrier of thermal energy. Hot exhaust air leaves the servers at roughly 30–40 °C, too cool for industrial processes and too low in density for efficient transport. Liquid cooling, particularly direct-to-chip and immersion systems, transforms that equation. When servers are cooled by circulating water or bathed in dielectric fluids, outlet temperatures can reach 50–60 °C. This opens the door to direct connection with modern district heating networks that no longer require steam-level temperatures. The physics of liquid heat transfer also means lower pumping energy and steadier control, which reduce losses.
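To put rough numbers on why air is such a poor carrier, the sketch below applies the standard relation Q = m × c_p × ΔT to both fluids. The fluid properties are approximate textbook values near room temperature, and the 1 MW heat load and 10 K temperature rise are illustrative assumptions, not figures from any specific facility.

```python
# Approximate fluid properties near room temperature (textbook values).
WATER = {"density_kg_m3": 1000.0, "cp_j_per_kg_k": 4186.0}
AIR = {"density_kg_m3": 1.2, "cp_j_per_kg_k": 1005.0}


def volumetric_flow_m3_s(heat_w, delta_t_k, fluid):
    """Volume flow needed to carry heat_w with a temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    # Q = m_dot * c_p * delta_T, solved for the mass flow m_dot (kg/s).
    mass_flow_kg_s = heat_w / (fluid["cp_j_per_kg_k"] * delta_t_k)
    return mass_flow_kg_s / fluid["density_kg_m3"]


heat_w = 1_000_000.0  # 1 MW of server heat (illustrative assumption)
delta_t_k = 10.0      # assumed 10 K rise across the servers

water_flow = volumetric_flow_m3_s(heat_w, delta_t_k, WATER)
air_flow = volumetric_flow_m3_s(heat_w, delta_t_k, AIR)

print(f"water: {water_flow:.4f} m3/s")
print(f"air:   {air_flow:.1f} m3/s")
print(f"air needs about {air_flow / water_flow:.0f} times the volume flow")
```

Under these assumptions, moving the same heat takes a few thousand times more volume of air than of water, which is the core reason liquid loops are easier to pipe to a heating network.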

In northern Europe, where heating networks are dense, this shift is already visible. In Odense, Denmark, the waste heat from Meta’s data center flows through large heat pumps into the local district heating grid, covering roughly 100,000 MWh of residential demand each year. In Finland, Microsoft’s new Azure facilities will deliver 250 MW of thermal output into Fortum’s network, enough to heat a quarter of a million homes. Stockholm, Helsinki, and Oslo have all established programs that treat data center heat as part of municipal energy planning. The projects work because the temperature of the water and the expectations of the grid have converged. Fourth-generation district heating systems operate at 60–70 °C, while fifth-generation systems use low-temperature or ambient loops where each building has its own small heat pump. In both cases, liquid-cooled servers supply heat at useful temperatures with minimal additional energy.
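The phrase "minimal additional energy" can be illustrated with the Carnot limit for a heat pump, COP = T_sink / (T_sink − T_source) with temperatures in kelvin. This is only an upper bound, and real machines achieve a fraction of it. The source and sink temperatures below are assumptions picked from the ranges mentioned in this article, not measurements from the projects named.

```python
def carnot_cop_heating(source_c, sink_c):
    """Ideal (Carnot) heating COP for lifting heat from source_c to sink_c, in Celsius."""
    t_source_k = source_c + 273.15
    t_sink_k = sink_c + 273.15
    if t_sink_k <= t_source_k:
        raise ValueError("sink must be hotter than source; no heat pump is needed otherwise")
    return t_sink_k / (t_sink_k - t_source_k)


# Assumed cases: air-cooled exhaust (~35 C) versus liquid-cooled outlet (~55 C),
# both lifted to a 70 C district heating supply temperature.
air_cooled_cop = carnot_cop_heating(35.0, 70.0)
liquid_cooled_cop = carnot_cop_heating(55.0, 70.0)

print(f"ideal COP from 35 C to 70 C: {air_cooled_cop:.1f}")
print(f"ideal COP from 55 C to 70 C: {liquid_cooled_cop:.1f}")
```

The warmer the source, the less electricity the lift requires, and when the server outlet already matches the network temperature, a heat exchanger alone may suffice.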

The thermodynamic picture becomes even more interesting when combined with aquifer or borehole thermal storage. These underground systems store heat in the summer when data centers and renewable electricity production peak, then extract it in winter when space-heating demand surges. Low-temperature aquifer systems routinely recover 70–90% of stored energy across a season. When connected to liquid-cooled data centers, they flatten the mismatch between constant computing load and seasonal heating demand. Instead of dumping heat in July, the same water can deliver warmth to homes in January. The technology turns waste into a managed inventory.
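As a back-of-envelope illustration of the seasonal shift, the sketch below applies the 70–90% recovery range to one summer of stored heat. The 100 MW heat output and the 90-day storage window are assumptions chosen for round numbers, and the function names are hypothetical.

```python
def stored_heat_mwh(heat_mw, days):
    """Heat sent to storage if heat_mw is injected continuously for the given days."""
    return heat_mw * 24 * days


def recovered_heat_mwh(stored_mwh, recovery_fraction):
    """Heat recovered in winter, given a seasonal recovery fraction between 0 and 1."""
    if not 0.0 <= recovery_fraction <= 1.0:
        raise ValueError("recovery_fraction must be between 0 and 1")
    return stored_mwh * recovery_fraction


# Assumption: a 100 MW facility stores all of its heat for 90 summer days.
summer_mwh = stored_heat_mwh(100.0, 90)
low_mwh = recovered_heat_mwh(summer_mwh, 0.70)
high_mwh = recovered_heat_mwh(summer_mwh, 0.90)

print(f"stored over summer: {summer_mwh:,.0f} MWh")
print(f"recovered in winter: {low_mwh:,.0f} to {high_mwh:,.0f} MWh")
```

In practice only part of the summer heat would go to storage, since hot water demand continues year-round, so this is an upper-end sketch rather than a sizing method.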

Even in the best configuration, not every joule is recoverable. Pumping losses, heat pump operation, pipe heat losses, and maintenance downtime all erode the total. But under ideal integration—liquid cooling, low-temperature district heating, thermal storage, and strong regulatory support—the recoverable fraction can reach 70–85% of the data center’s annual waste heat. In a few tightly optimized campuses where demand is continuous and distances are short, up to 90% is feasible. That means a 100 MW data center could deliver 70–90 MW of continuous community heating. The theoretical limit is higher, but hardware safety margins and variable demand make those figures realistic.
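The arithmetic behind those figures is simple enough to sketch. The code below takes the 100 MW facility and the 70–90% recoverable range from the paragraph above and converts them into average deliverable heat and annual energy, assuming the facility runs flat out for all 8,760 hours of a non-leap year. The function name is hypothetical.

```python
HOURS_PER_YEAR = 8760  # non-leap year


def recoverable_heat(load_mw, recoverable_fraction):
    """Return (average deliverable heat in MW, annual heat in MWh)."""
    if not 0.0 <= recoverable_fraction <= 1.0:
        raise ValueError("recoverable_fraction must be between 0 and 1")
    heat_mw = load_mw * recoverable_fraction
    return heat_mw, heat_mw * HOURS_PER_YEAR


# Assumption: a constant 100 MW load, all of which ends up as heat.
for fraction in (0.70, 0.85, 0.90):
    heat_mw, heat_mwh = recoverable_heat(100.0, fraction)
    print(f"{fraction:.0%}: {heat_mw:.0f} MW average, {heat_mwh:,.0f} MWh per year")
```

These are annual averages. Matching them to winter-heavy demand is what the storage and network design described above has to solve.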

The economics are improving as well. Selling heat has rarely been a profit center for computing firms, yet the avoided cooling costs and carbon accounting value are real. Where natural gas is expensive or taxed, district heating operators are willing partners. Stockholm Exergi’s Open District Heating program pays data centers for their waste heat, offering predictable long-term contracts. In Denmark, regulatory changes removed the waste-heat tax, unlocking projects that had been stalled by perverse incentives. Germany’s new Energy Efficiency Act mandates that new data centers reuse at least 10% of their heat from 2026, rising to 20% by 2028. The European Union’s revised Energy Efficiency Directive requires every facility above 1 MW to assess and, where feasible, implement heat recovery. Policy is catching up to physics.

Social license is another driver. Communities hosting data centers increasingly ask what they receive in return for the power, water, and land these facilities consume. Jobs and taxes help, but a steady supply of low-carbon heat is tangible in a way that ESG statements are not. The sight of homes or greenhouses warmed by server heat softens resistance to expansion. In Norway, a data center’s partnership with a fish farm has become a local point of pride, proving that industrial heat can sustain life rather than waste energy. These gestures matter in permitting processes and in the broader conversation about digital infrastructure and sustainability.

I explored the potential for geothermal cooling of data centers in my recently assembled report on geothermal energy, but realized the topic deserved more detailed treatment as an integrated part of the energy network. This was inspired in part by my work on Ireland’s 2050 energy roadmap and with Tennet in the Netherlands this summer. In both cases, requiring data centers to feed heat into heat networks of some sort was a key element of the overall solution. Both countries are data center hubs, in part because they are coastal and many trans-Atlantic data cables come ashore on their beaches, dunes, and headlands.

Liquid cooling and next-generation heating networks also fit naturally into a grid dominated by renewables. They transform data centers from passive loads into integrated energy assets. A facility consuming solar or wind power by day and exporting heat into a low-temperature grid by night functions as both digital infrastructure and thermal plant. With aquifer storage, it becomes a seasonal energy buffer, capable of shifting renewable electricity into winter heating months. The same systems that support AI workloads and cloud computing could stabilize municipal energy supply, reducing fossil backup requirements.

The physics make it possible, the technology makes it practical, and regulation is starting to make it mandatory. The barriers that remain are mostly organizational and financial: aligning utilities, municipalities, and hyperscale operators to share infrastructure and risk. But where the pieces come together, the results are persuasive. Recovered heat from servers has already replaced hundreds of millions of cubic meters of natural gas across Scandinavia. The examples demonstrate that the idea is no longer theoretical. It is an emerging design principle for data centers in cold climates and increasingly for urban campuses everywhere.

The story of data center heat reuse is a reminder that energy problems are often problems of perception. For decades, engineers treated waste heat as something to eliminate. Now it is becoming a resource to manage. Each MWh of electricity entering a data center carries two products: digital work and thermal energy. With liquid cooling, Gen-5 networks, and aquifer storage, most of that thermal energy can be put to work again. What was once the cost of computing can become part of the solution to urban heating. The quiet machines at the edge of our cities may yet keep them warm.
