
A few months ago, Sami Khan, a Simon Fraser University Professor and MIT engineering PhD, reached out to me. He’d read something I’d published on ocean geoengineering and wanted to know if I was interested in talking with his PhD, master’s degree, and undergraduate students about the subject, and carbon capture, utilization, and sequestration as a guest lecturer in a CCUS co-op course he was running.

I checked to make sure that he knew how bearish I was on carbon capture in general, and he did, and wanted to expose his students to me regardless. Recently the date arrived and I spent three hours with Khan and his students. First, a bit of background on the program.

Khan was one of the first faculty members of Simon Fraser University’s Sustainable Engineering Program at the Surrey campus and had the opportunity with early colleagues to shape it. It’s a forward-thinking initiative designed to address the pressing environmental challenges of our time through innovative engineering solutions. Established with a vision to integrate sustainability principles into engineering education, the program focuses on the development of technologies and systems that promote environmental stewardship, energy efficiency, and sustainable development. Students are equipped with interdisciplinary knowledge and skills, preparing them to design and implement sustainable engineering practices across various sectors. The curriculum emphasizes hands-on experience, critical thinking, and problem-solving, ensuring graduates are well-prepared to lead in the transition towards a more sustainable future.

I’m always eager to spend time with extraordinarily bright and educated people and be challenged by them, but this was something more for me. As I’ve said many times, engineers are a finite resource and it’s easy for them to be seduced into doing technically interesting but ultimately worthless work. The engineers working on urban air mobility, hydrogen transportation, gravity storage that isn’t pumped hydro, and a host of other things could be moving the needle instead. And carbon capture and storage is among the biggest time and energy wasters going. I hoped to help these engineers, who are just starting their careers, pick wisely.

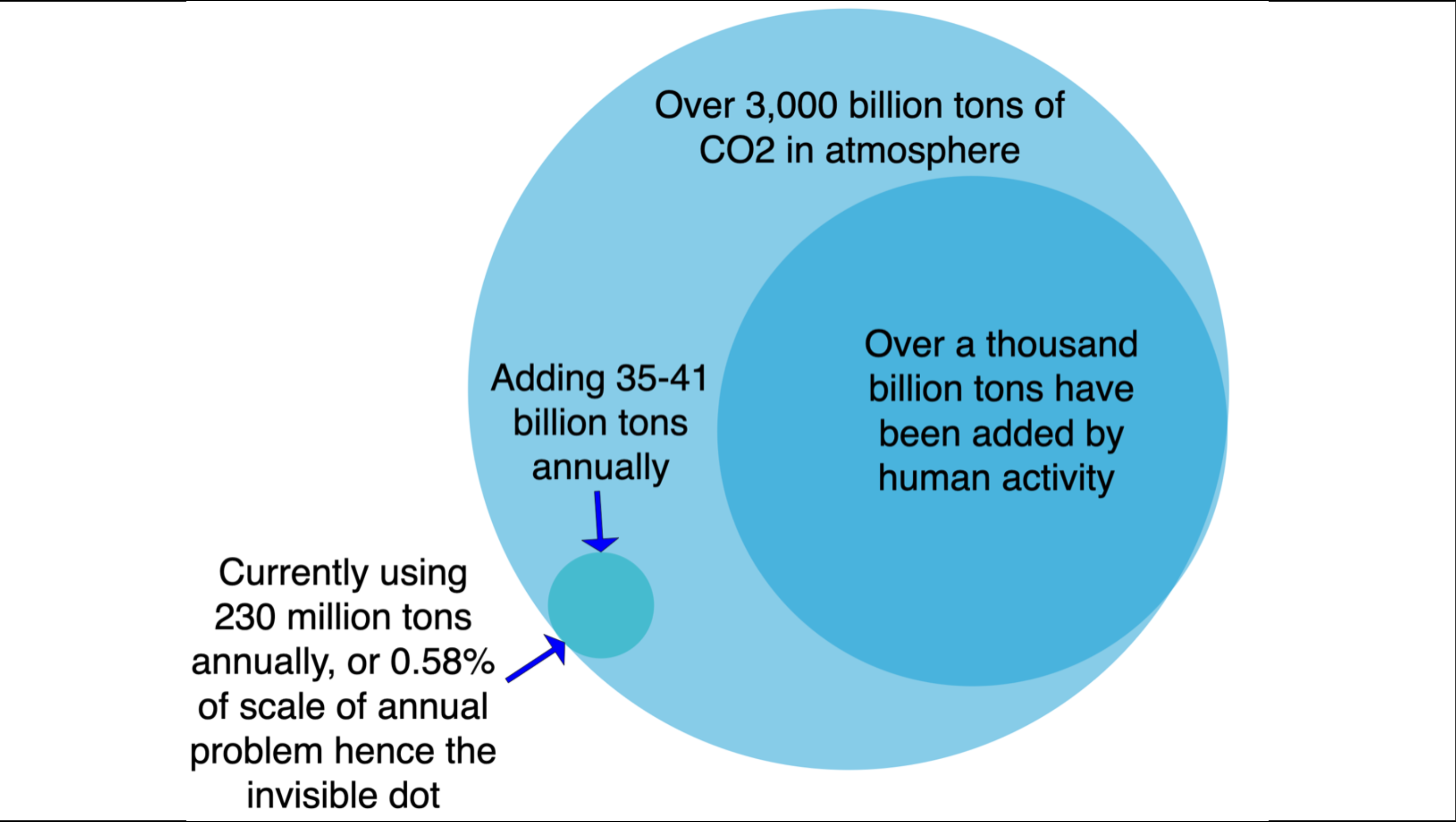

What’s with the Venn diagram? Well, that’s the scale of the CO2 in the atmosphere right now, with the scale of our historical additions and the scale of our annual additions. There are about 3,000 billion tons of CO2 in the atmosphere right now, and we put a third of it there since the beginning of the Industrial Revolution by burning fossil fuels.

The invisible dot is our current annual consumption of CO2 as a commodity. The single biggest market for CO2 today is enhanced oil recovery, over a third of the 230 million tons that somebody pays for every year.

Any purported solution has to start with the reality that we are adding tens of billions of tons of CO2 a year to the atmosphere, and the total overage to consider drawing down is around 1,000 billion tons. Materiality means something that can scale to 1% of the 1,000 billion tons, or 10 billion tons in total.

An analogy I use is trying to get horses back into the barn after the door has been left open and they’ve wandered away. Except there are trillions of horses, they are microscopically small, they are invisible, and they have wings. It’s vastly more efficient to not let them escape in the first place.

That means that solutions that can achieve a million tons a year or ten million tons are simply immaterial. Even 100 million tons a year just isn’t all that. They aren’t relevant, but instead are a distraction. Who benefits?
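A rough sketch of that materiality arithmetic, using only the figures above. The function names are illustrative, and reading the threshold as "years at a constant annual rate to reach 10 billion tons" is an assumption made here for the sake of the example.

```python
# Figures from the text; the function names are illustrative only.
EXCESS_CO2_TONS = 1_000 * 10**9   # about 1,000 billion tons of overage
MATERIALITY_PERCENT = 1           # "1% of the 1,000 billion tons"


def materiality_threshold_tons() -> int:
    """Cumulative tons a solution must be able to reach to count as material."""
    return EXCESS_CO2_TONS * MATERIALITY_PERCENT // 100


def years_to_threshold(tons_per_year: float) -> float:
    """Years at a constant annual rate to reach the threshold (assumed reading)."""
    if tons_per_year <= 0:
        raise ValueError("tons_per_year must be positive")
    return materiality_threshold_tons() / tons_per_year


if __name__ == "__main__":
    for rate in (1e6, 10e6, 100e6):
        print(f"{rate:>13,.0f} t/yr -> {years_to_threshold(rate):,.0f} years")
```

Under that reading, a million tons a year takes 10,000 years to reach the threshold, and even 100 million tons a year takes a century.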

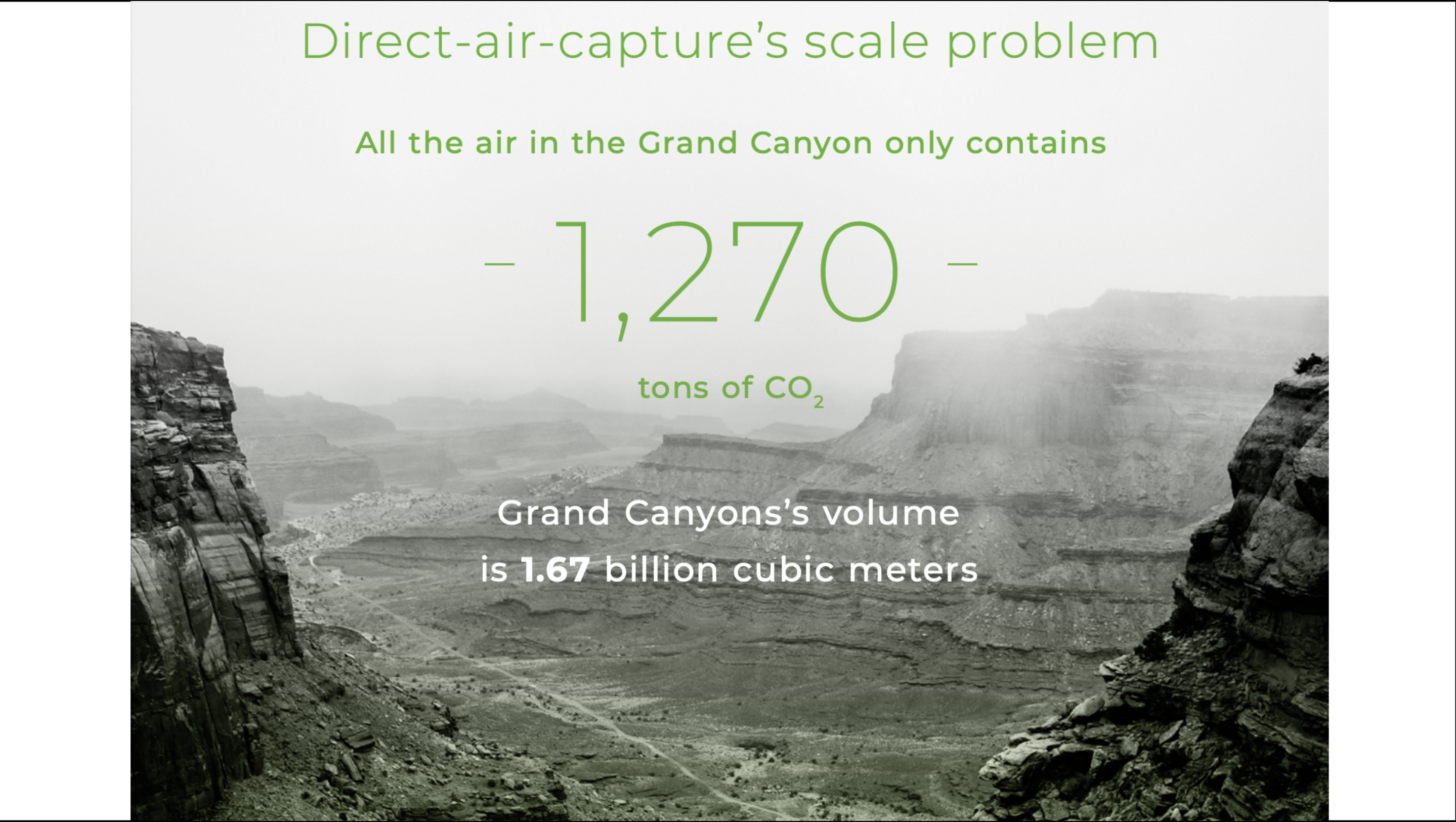

That leads to the problems with mechanical direct air capture, starting with the sheer volume of the air that needs to be moved in order to get a ton of carbon dioxide. For sports fans, think of the Houston Astrodome, one of the biggest sports stadiums on the planet, which can hold up to 67,000 people. It’s huge.

The entire thing contains only 1.1 tons of carbon dioxide in all of the air inside of it. That’s at 100% efficiency of capturing the CO2 out of the air. Modern DAC technologies generally have capture efficiencies ranging from 70% to 90%. To get to a non-material million tons a year, we’d have to multiply the Houston Astrodome until it was bigger than New York or Tokyo, two of the biggest cities in the world.

A hundred million tons, getting into sight of materiality, would require a hundred of Tokyo’s or New York’s areas covered in Houston Astrodomes. Getting a sense of how absurdly big the atmosphere is, and how little carbon dioxide is in it?
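To put a number on the Astrodome comparison, here is a minimal sketch that counts how many Astrodome-sized volumes of air would have to be processed each year. It relies only on the 1.1 tons per Astrodome figure and the 70% to 90% efficiency range from the text; the function name is hypothetical.

```python
ASTRODOME_CO2_TONS = 1.1  # CO2 in one Astrodome's worth of air, per the text


def astrodome_volumes_needed(target_tons: float,
                             capture_efficiency: float = 1.0) -> float:
    """Astrodome-sized volumes of air to process for a given CO2 target."""
    if not 0.0 < capture_efficiency <= 1.0:
        raise ValueError("capture_efficiency must be in (0, 1]")
    return target_tons / (ASTRODOME_CO2_TONS * capture_efficiency)


if __name__ == "__main__":
    for efficiency in (1.0, 0.9, 0.7):
        volumes = astrodome_volumes_needed(1_000_000, efficiency)
        print(f"efficiency {efficiency:.0%}: {volumes:,.0f} Astrodomes of air per year")
```

At perfect efficiency that is a bit over 900,000 Astrodomes of air a year for a million tons, and roughly 1.3 million at 70% efficiency.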

Getting a sense of how much air has to be moved just to be able to capture a material amount of CO2?

Enter a solution with a wall of fans 2 kilometers long, 20 meters high, and 3 meters deep. That’s sufficient to capture a million tons of carbon dioxide a year, which as noted above, is far below the scale of materiality.

Have humans built any machines that are two kilometers long? Not really. Pipelines, roads, and a couple of bridges are that long, but they are mostly inert objects with incredibly few moving parts.

The Great Wall of China is a lot longer, but it just sits there and it’s only 6 to 7 meters tall. Zero moving parts, zero technology and shorter. If the entire Great Wall were turned into one of these solutions, it would be three times taller, a bit thinner, and be able to capture perhaps 12 million tons of CO2 a year. And 12 million tons doesn’t get to materiality. Oh, and an enormous number of moving parts between the fans, the liquid pumps, the heat system, and the like.

I assessed this solution 5 years ago, rebuilding my brain into a cheap imitation of a chemical processing engineer’s. The facsimile and effort were good enough to win me a bunch of nerdy, PhD-laden, chemical engineering fans. Not remotely enough to make me a chemical processing engineer, but at least they respected the effort.

The solution uses liquid containing potassium hydroxide. When air is pulled into the system, the CO2 reacts with this chemical and gets trapped. Next, it adds another chemical, calcium hydroxide, which helps release the trapped CO2 and clean the liquid so it can be used again. To get the CO2 out, they need to heat the mixture to a very high temperature, about 900 degrees Celsius (1,652 degrees Fahrenheit).

The original peer-reviewed paper for this solution said it was going to be powered by natural gas and that a couple of additional carbon capture solutions were going to be bolted on to deal with the emissions from burning the gas. Every ton of CO2 captured from the atmosphere was going to create another 0.5 tons of CO2 from carbon that was previously sequestered.

I realized at the time that the only natural market for this kind of technology was enhanced oil recovery. When 40% of the market for CO2 was enhanced oil recovery, it wasn’t a big leap. When US shale oil was also leaking enormous amounts of unmarketable, unwanted natural gas, it really wasn’t a big leap.

The venture capital concept of a natural market refers to a business finding its ideal customer base naturally, without needing heavy marketing or forced efforts. In this case, it wasn’t dealing with climate change, it was getting more oil out of the ground.

A couple of months after I published that prediction, the firm found its first client, an oil and gas major that was going to build a facility on the Permian Basin to use unmarketable natural gas to power the system to capture CO2 to pump underground into tapped out oil wells to get more oil out. When I ran into one of their engineers at a conference, they let slip that they were receiving $250 per ton of CO2 that they pumped underground from a couple of different governmental programs.

When a ton of CO2 is pumped underground, it unlocks a quarter of a ton to a full ton of oil, which when used as directed releases up to three tons of CO2 into the air. This is not remotely a climate solution. Not that long ago, the firm was bought outright by the oil and gas major that was its first and likely only customer.
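The enhanced oil recovery arithmetic can be sketched as follows. The default of three tons of CO2 per ton of oil burned is an assumption inferred from the "up to three tons" figure above, and the function name is illustrative. The sketch leaves out the emissions from burning natural gas to run the capture process and any methane leakage, so it flatters the result.

```python
def net_co2_per_ton_injected(oil_tons_per_co2_ton: float,
                             co2_tons_per_oil_ton: float = 3.0) -> float:
    """Net CO2 added to the air per ton of CO2 injected for oil recovery.

    Positive means more CO2 ends up in the atmosphere than was stored.
    co2_tons_per_oil_ton is an assumed combustion factor, not a measured one.
    """
    emitted_from_oil = oil_tons_per_co2_ton * co2_tons_per_oil_ton
    stored = 1.0  # the one ton of CO2 pumped underground
    return emitted_from_oil - stored


if __name__ == "__main__":
    for oil_yield in (0.25, 0.5, 1.0):
        net = net_co2_per_ton_injected(oil_yield)
        print(f"{oil_yield} t oil per t CO2 -> net {net:+.2f} t CO2")
```

At the top of the range, every ton stored puts a net two tons into the air. Only at the very bottom of the range does the balance dip slightly negative, and that is before counting the energy used to capture the CO2 in the first place.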

This is foreshadowing, a valid and respected literary device, in case anyone is keeping track of such things.

A firm is exploring mineral weathering as a beach sand supplementation scheme, one of many thought experiments about spreading finely crushed olivine or other minerals which react with atmospheric CO2 over absurd expanses. Despite various mineral weathering approaches, none have withstood scrutiny when faced with key questions about sourcing, logistics, efficiency, energy use, environmental impact, and cost. None have been deployed outside of tiny trials.

This one focuses on olivine, a common magnesium iron silicate abundant in the earth’s crust. When exposed to the atmosphere, olivine reacts with carbon dioxide to form magnesium carbonate. One ton of finely crushed olivine can absorb a ton of CO2. This approach, first mentioned in a 1990 Nature publication, has not yet been commercialized despite ongoing academic interest and venture capital funding for related startups.

The firm aims to mine olivine, grind it into sand, and use it to replenish storm-eroded beaches, hoping to gain community support over time. However, practical and ecological concerns remain. Olivine’s weathering process is slow, taking about 100 years to reach 70% efficacy for grains smaller than a millimeter, which is to say, in the range of sand.

Olivine’s green hue, while distinctive, poses challenges. Communities value their beaches’ current appearance and texture, which olivine sand would alter significantly. Economic considerations are significant. Beach tourism generates substantial revenue, and the cost of beach replenishment is often covered by cobbled-together public funds. In Florida, beaches are vital to the economy, with one study I read suggesting over 50% of Florida’s annual GDP was related to beaches, and changing their sand composition could reduce their appeal.

There is a single green beach in the world which is a tourist attraction — one in Hawaii where the locals have somewhat blockaded the road to it in order to extort more money out of tourists. Then there are three other beaches, mostly in colder parts of the world, which have patches of green sand. Not exactly beach meccas.

Widespread adoption of green beaches is unlikely and the economics are deeply unlikely to work out. The project has secured $1.6 million in grants and is seeking Series A funding, primarily supported by a community of academics studying olivine weathering. Even with optimistic projections, potential carbon drawdown remains a fraction of current annual emissions. Once again, materiality matters.

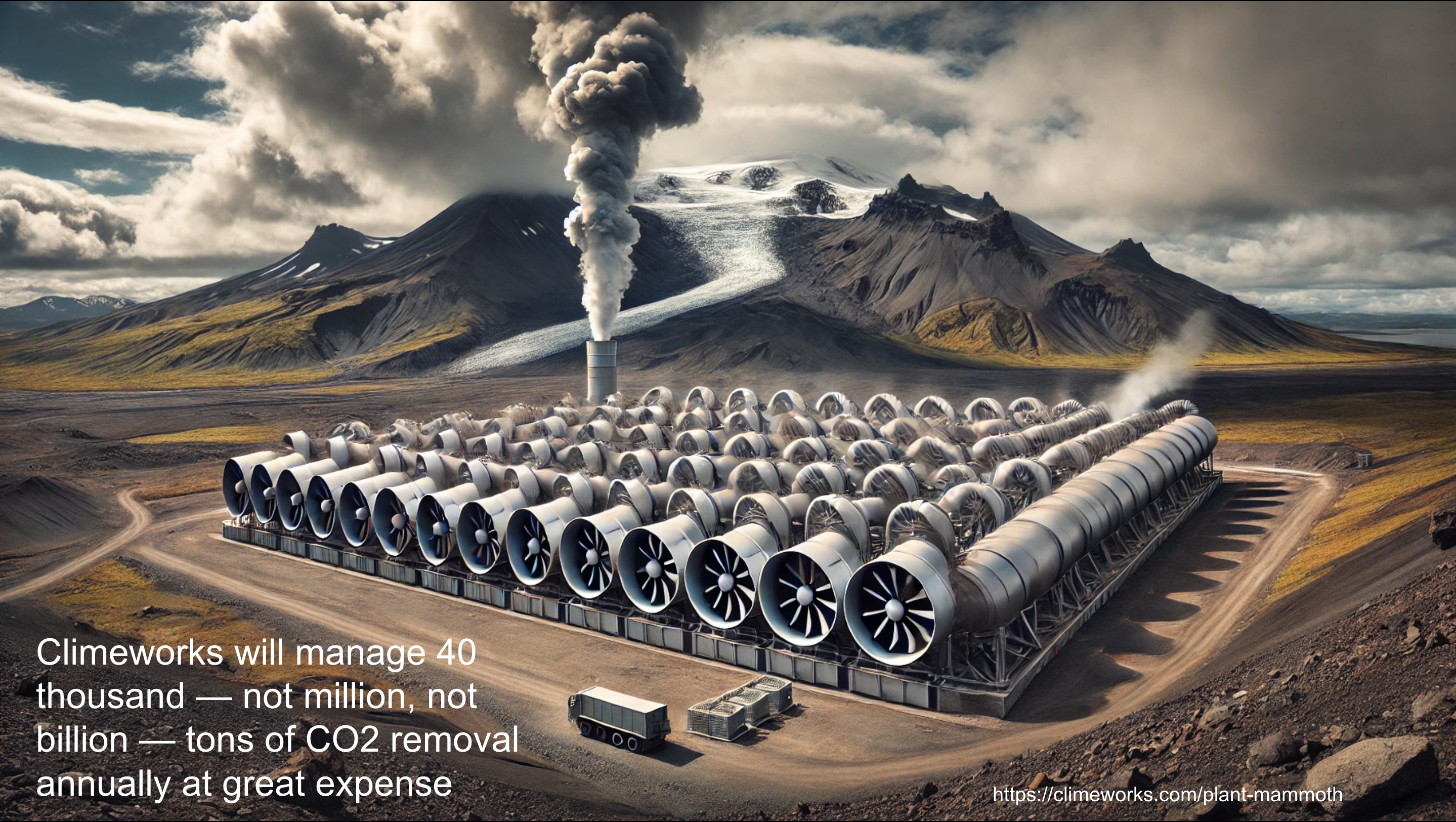

In Iceland, a tiny pair of direct air capture facilities have been built near Reykjavik. The process involves large fans drawing ambient air into collectors, where a specially designed filter material traps the CO2. Once the filter is saturated, the CO2 is isolated through a heating process, releasing it from the filter for subsequent use.

After capturing the carbon dioxide, it is stored by mixing it with water and injecting it deep into the basaltic rock formations found in Iceland. Through a natural mineralization process, the CO2 reacts with the basalt and solidifies into stable carbonate minerals within two years, effectively locking it away and preventing it from re-entering the atmosphere.

The facility currently captures around 40,000 tons of CO2 annually, after the ‘big’ expansion. Note, thousands, not even millions, never mind billions. This is a rounding error on an emaciated gnat’s thorax.

And an expensive rounding error. The cost of capturing CO2 with this technology is estimated to be around $600 to $800 per ton. That cost isn’t going to go down, because it’s really basic stuff not amenable to the levers that have driven down computing power, wind turbine, battery, and solar panel cost.
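For scale, a small sketch using the figures above: 40,000 tons a year at $600 to $800 per ton, set against the 10 billion ton materiality threshold from earlier in the piece. The names are illustrative, and applying today's unit cost at the threshold scale is a deliberately naive assumption.

```python
ANNUAL_CAPTURE_TONS = 40_000        # claimed capture after the expansion
COST_PER_TON_USD = (600, 800)       # estimated cost range from the text
MATERIALITY_TONS = 10_000_000_000   # 1% of the 1,000 billion ton overage


def cost_range_usd(tons: int, cost_range=COST_PER_TON_USD):
    """Low and high total cost for capturing the given number of tons."""
    low, high = cost_range
    return tons * low, tons * high


def share_of_materiality(tons: float, threshold: int = MATERIALITY_TONS) -> float:
    """Fraction of the materiality threshold that the given tonnage covers."""
    return tons / threshold


if __name__ == "__main__":
    low, high = cost_range_usd(ANNUAL_CAPTURE_TONS)
    print(f"annual cost: ${low:,} to ${high:,}")
    print(f"share of threshold per year: {share_of_materiality(ANNUAL_CAPTURE_TONS):.6%}")
    low, high = cost_range_usd(MATERIALITY_TONS)
    print(f"cost of 10 billion tons at the same unit cost: ${low:,} to ${high:,}")
```

That is $24 million to $32 million a year for four millionths of the threshold, and $6 trillion to $8 trillion to reach the threshold if the unit cost stayed the same.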

Let’s pivot from the dead end of air carbon capture to the challenged and wetter end of oceanic geoengineering and carbon sequestration.

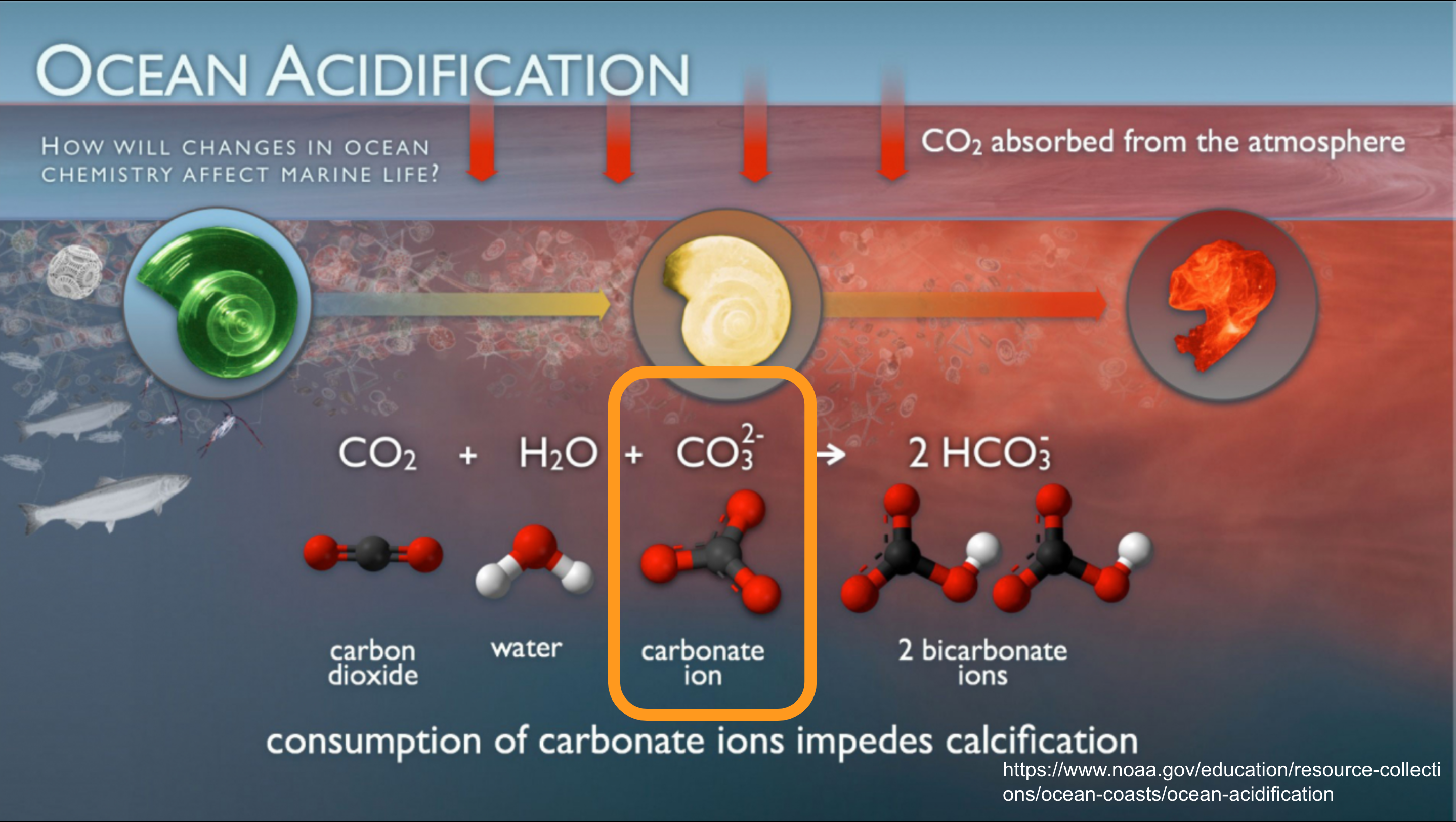

When the ocean absorbs carbon dioxide from the atmosphere, a series of chemical reactions occurs, significantly impacting marine ecosystems. Initially, CO2 dissolves in seawater, forming carbonic acid. This acid dissociates into hydrogen ions and bicarbonate ions, and the hydrogen ions can further react with carbonate ions to form more bicarbonate. This shift in the carbonate ion to bicarbonate ion balance reduces the availability of carbonate ions, which are crucial for shellfish and other marine organisms that rely on them to build their calcium carbonate shells and skeletons.

The process is referred to as ocean acidification, lowering the pH of seawater and reducing its alkalinity. This process not only threatens the structural integrity of marine life, but also diminishes the ocean’s capacity to absorb CO2 over time, potentially accelerating the rate of climate change. It’s called oceanic acidification, but really it’s lowering alkalinity and sucking away mollusk shell building blocks.

For a bit more useful context, about 90% of the carbon in the ocean is locked up in bicarbonate ions. About 9% is in carbonate ions — the ones that shellfish need — and about 1% is dissolved CO2. These ratios are important, as getting CO2 out of ocean water means breaking down the bicarbonate and carbonate ions, and putting more CO2 into ocean water means decreasing the carbonate ions.

A different firm tried to use magnesium hydroxide, commonly referred to as milk of magnesia and used as an antacid. When introduced into seawater, magnesium hydroxide bonds with the carbonic acid from absorbed CO2, forming magnesium carbonate and water, thus preventing carbonate ions from being turned into bicarbonate ions and preventing ocean alkalinity from being reduced. This keeps ocean alkalinity stable and doesn’t eat up the carbonate ions shellfish need for their shells.

However, the technique does not directly remove atmospheric carbon dioxide. Keeping ocean alkalinity from declining keeps the ocean’s ability to absorb CO2 up, alongside a bunch of other factors that impact absorption, with a biggie being temperature.

The firm secured significant funding, including grants and the prestigious $1-million, Musk-backed CDR XPRIZE. Part of that is because they had a California PhD who has been publishing about this for decades as their scientific officer. With the money, they built two pilot plants which they operate.

It’s remarkable that they got a lot of money, awards, and pilot plants because of some basic stuff it took me about 25 minutes to figure out from scratch after hearing about the technology.

The first big one was that the process of manufacturing magnesium hydroxide carries a carbon debt greater than the oceanic carbon drawdown improvement. There is no climate win when the raw materials required have a big carbon debt. Full lifecycle carbon accounting is required for any solution, and this one fails that sniff test.

The second big one is that the raw material, magnesium hydroxide, is expensive. We’ve been manufacturing it for centuries and are doing it as cheaply as it’s ever going to be done. The end to end costs, even if there was net carbon drawdown, would have been hundreds of dollars per ton.

The third is that we don’t really have a good handle on what a lot of magnesium carbonate would do in ecosystems. It’s assumed to be relatively benign, but that’s different than being confirmed to be benign.

All three of these are trivial to determine from public data sources today, and research in the 1990s established the data. This has obviously been a dead end for approaching 30 years, yet academics and now this firm have attempted to implement it, and run full speed into the brick wall of reality.

The carbon debt and economic failures of the approach mean that after winning prizes, getting investment, and building pilot plants with its proposed solution, the firm is pivoting to exploring the use of mining waste to produce alkaline substances to dump into the ocean instead. That way lie a lot more red flags.

A California-based carbon capture company has raised over $33 million to capture CO2 from seawater. Its method involves pumping seawater, splitting a bit off to make it much more acidic with electrolysis and a proprietary membrane, then combining it back into the main stream. This dissolves the carbonate ions and bicarbonate ions, turning them into CO2. They bubble the CO2 out of the water with standard vacuum gas separation techniques, then rebalance the alkalinity of the water before returning it to the ocean.

Sharp eyes will have noticed the bit about turning carbonate ions into CO2 and removing them from the seawater. Yes, this process would remove shellfish-essential ingredients from the seawater. This is a local process, so can be managed where there is a strong side current bringing new ocean water past the facility every hour and no down-current shellfish life to speak of.

Another issue lies in seawater’s complexity. Rich in life, salts, and minerals, seawater can cause rapid fouling of the system’s proprietary membranes, which are both expensive and challenging to maintain. Additionally, the process of extracting carbon dioxide from seawater is energy-intensive and inefficient. The firm would need to process billions of tons of seawater annually to capture one million tons of CO2.

Just raising that much heavy seawater the likely five meters would be energetically expensive, never mind the energy for the process itself. They also have to do something with the CO2 itself, and more on that later. The energy required for this process — tens of GWh annually — would be better used in powering homes, electric vehicles, and industrial operations, significantly reducing emissions more directly.
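A back-of-the-envelope sketch of the lifting energy alone, using the textbook potential energy formula (mass times gravity times height). The two billion tons in the usage example is a hypothetical stand-in for "billions of tons", not a figure from the firm, and pump losses are ignored, so real consumption would be higher.

```python
G = 9.81                 # gravitational acceleration, m/s^2
JOULES_PER_GWH = 3.6e12  # 1 GWh = 3.6e12 J


def lift_energy_gwh(seawater_tons: float, lift_m: float = 5.0) -> float:
    """Ideal energy to raise a mass of seawater by lift_m meters, in GWh.

    Ignores pump and motor losses, so this is a lower bound.
    """
    mass_kg = seawater_tons * 1_000.0
    return mass_kg * G * lift_m / JOULES_PER_GWH


if __name__ == "__main__":
    # Hypothetical: 2 billion tons of seawater a year, lifted 5 meters.
    print(f"{lift_energy_gwh(2e9):.2f} GWh per year")
```

With those assumed inputs, lifting alone comes to roughly 27 GWh a year before any electrolysis, vacuum separation, or compression.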

And just to drive the nail home, the biggest problem with ocean acidification is removing the ingredients shellfish need, and this doesn’t solve that.

Another startup’s process involves capturing CO2 from an external source and mixing it with seawater. It uses electricity to alter the seawater’s electrochemistry, accelerating the conversion of CO2 to carbonic acid, which then reacts to form bicarbonate ions while depleting carbonate ions. This treated water, now heavy with bicarbonate ions, is returned to the ocean.

The pilot plant in Singapore will sequester 100 kilograms of CO2 daily in seawater, and produce low-carbon hydrogen if powered by green electricity. The plant’s process is essentially hydrogen electrolysis, which could produce 300 kilograms of hydrogen by mixing CO2 with seawater. Their recent paper indicates they can sequester about 4.6 kilograms of CO2 per cubic meter of seawater, meaning sequestering a ton of CO2 would require around 217 tons of seawater. The CO2 has to be pure, of course, otherwise complications of both chemistry and ocean biology arise.
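The seawater requirement follows directly from the 4.6 kilograms per cubic meter figure. In this sketch the seawater density of about 1.025 tons per cubic meter is an assumed typical value, and the function names are illustrative. The 217 figure in the text corresponds to cubic meters, which is close to tons because a cubic meter of seawater weighs only slightly more than a ton.

```python
CO2_KG_PER_M3 = 4.6            # from the paper cited in the text
SEAWATER_TONS_PER_M3 = 1.025   # assumed typical seawater density


def seawater_m3_per_ton_co2(kg_per_m3: float = CO2_KG_PER_M3) -> float:
    """Cubic meters of seawater needed to hold one ton of CO2."""
    return 1_000.0 / kg_per_m3


def seawater_tons_per_ton_co2(kg_per_m3: float = CO2_KG_PER_M3,
                              density: float = SEAWATER_TONS_PER_M3) -> float:
    """Tons of seawater needed to hold one ton of CO2."""
    return seawater_m3_per_ton_co2(kg_per_m3) * density


def annual_tons_from_daily_kg(daily_kg: float, days: int = 365) -> float:
    """Annual tons sequestered at a constant daily rate in kilograms."""
    return daily_kg * days / 1_000.0


if __name__ == "__main__":
    print(f"{seawater_m3_per_ton_co2():.0f} m3 of seawater per ton of CO2")
    print(f"{seawater_tons_per_ton_co2():.0f} tons of seawater per ton of CO2")
    print(f"{annual_tons_from_daily_kg(100)} tons of CO2 per year at 100 kg/day")
```

The pilot's 100 kilograms a day comes to 36.5 tons a year if it ran every day.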

Of course, what they are doing is electrolysis of salty water, which is the chlor-alkali process which makes chlorine, a deadly poisonous gas. How they get around that isn’t described. Regardless, the process depletes the carbonate ions crucial for shellfish.

Do they mention shellfish in their literature? Do they mention chlorine and its dangers to humans and aquatic life? Do they have a biologist on staff? No, no, and no.

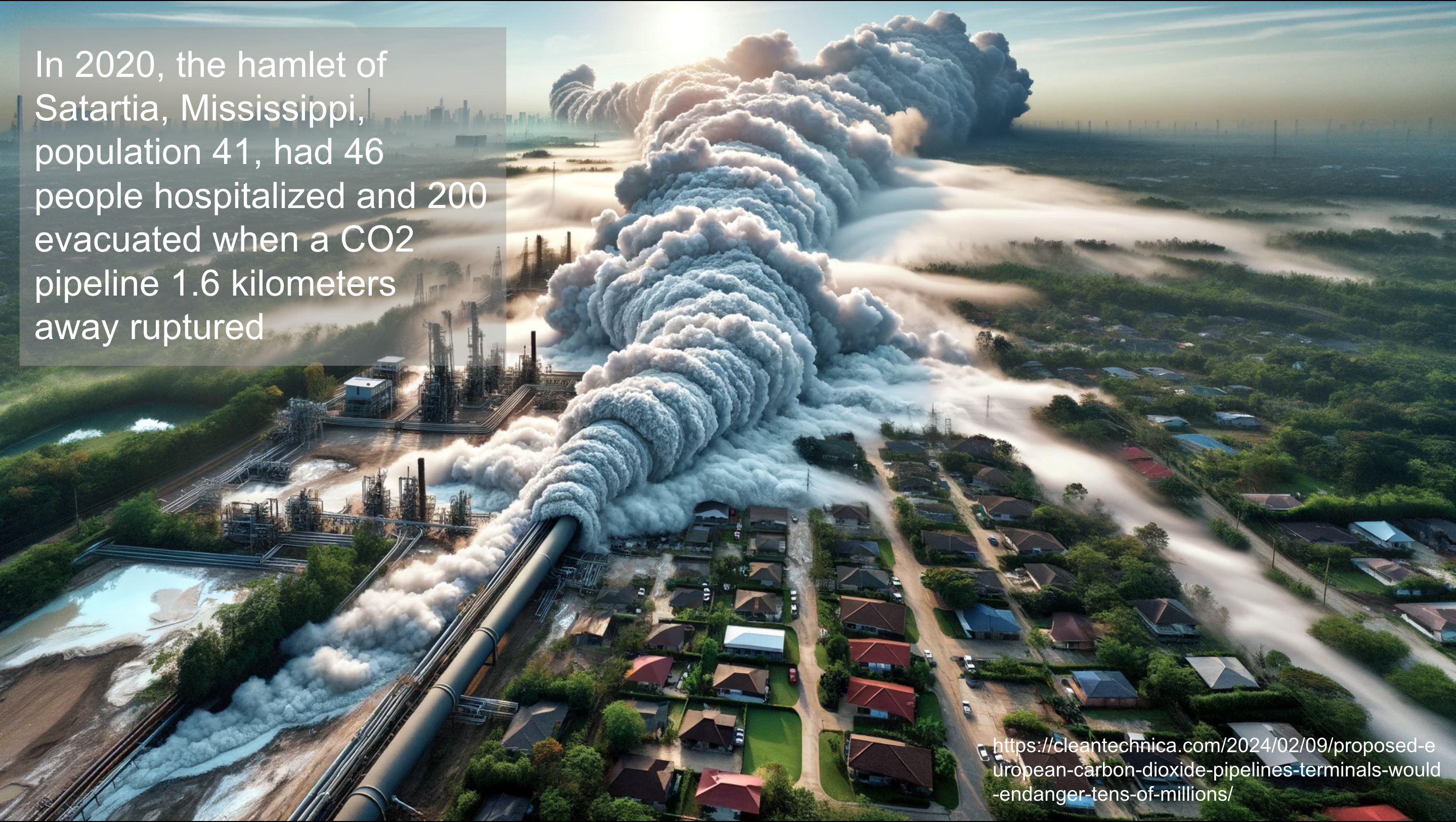

Speaking of pure CO2, let’s talk about Satartia, Mississippi. It’s a tiny village of 41 residents in western Mississippi. In 2020, a CO2 pipeline 1.6 kilometers away that transported the gas to an enhanced oil recovery facility — there’s that phrase again — breached because of a minor landslide.

No big deal, you think, CO2 is a gas and it will just disperse. Not so fast. A lot of CO2 pipelines use liquid phase CO2 because it’s a lot easier to pump an incompressible and much denser fluid than a compressible and much more voluminous gas. That’s what was in the Satartia pipeline, liquid CO2. When the pipeline burst, the liquid turned rapidly into a gas, about 590 times the volume as the liquid.

No big deal, you think, the resulting CO2 is a gas and it will just disperse. Not so fast, yet again. CO2 is heavier than the nitrogen and oxygen in our atmosphere, and so it will roll downhill in a thick blanket and settle into depressions, dispersing only after hours. Think of dry ice machines, which use frozen CO2 to create a layer of fog along dance floors or in sporting events.

The pipeline was uphill from Satartia.

The blanket of CO2, meters or tens of meters thick, rolled downhill, across an intervening highway where it left people who had pulled their cars into a rest stop unconscious and thrashing. It rolled over Satartia, where it left everyone in town unconscious and thrashing on the ground or in their homes. They saw it coming, as it was tinted a bit by the presence of trace elements of hydrogen sulfide, the poisonous gas that smells like rotten eggs, but in too small amounts to be harmful.

So why was everyone left unconscious and thrashing? Why didn’t they just drive away? Humans can’t breathe too much CO2. In amounts as low as a thousand parts per million, minor cognitive impairment starts to be detectable. At 2,500 parts per million, we are reduced to the intellectual capacities of kindergarten children. At 30,000 parts per million, we’re at immediate and serious health risk. And internal combustion cars don’t work without oxygen either. People’s cars wouldn’t start and emergency responders couldn’t get into the area. A couple of hundred people were evacuated and 46 hospitalized.

Hours later, CO2 at levels of 30,000 parts per million were still present in some rooms in buildings in the village.

What does this have to do with carbon capture in general?

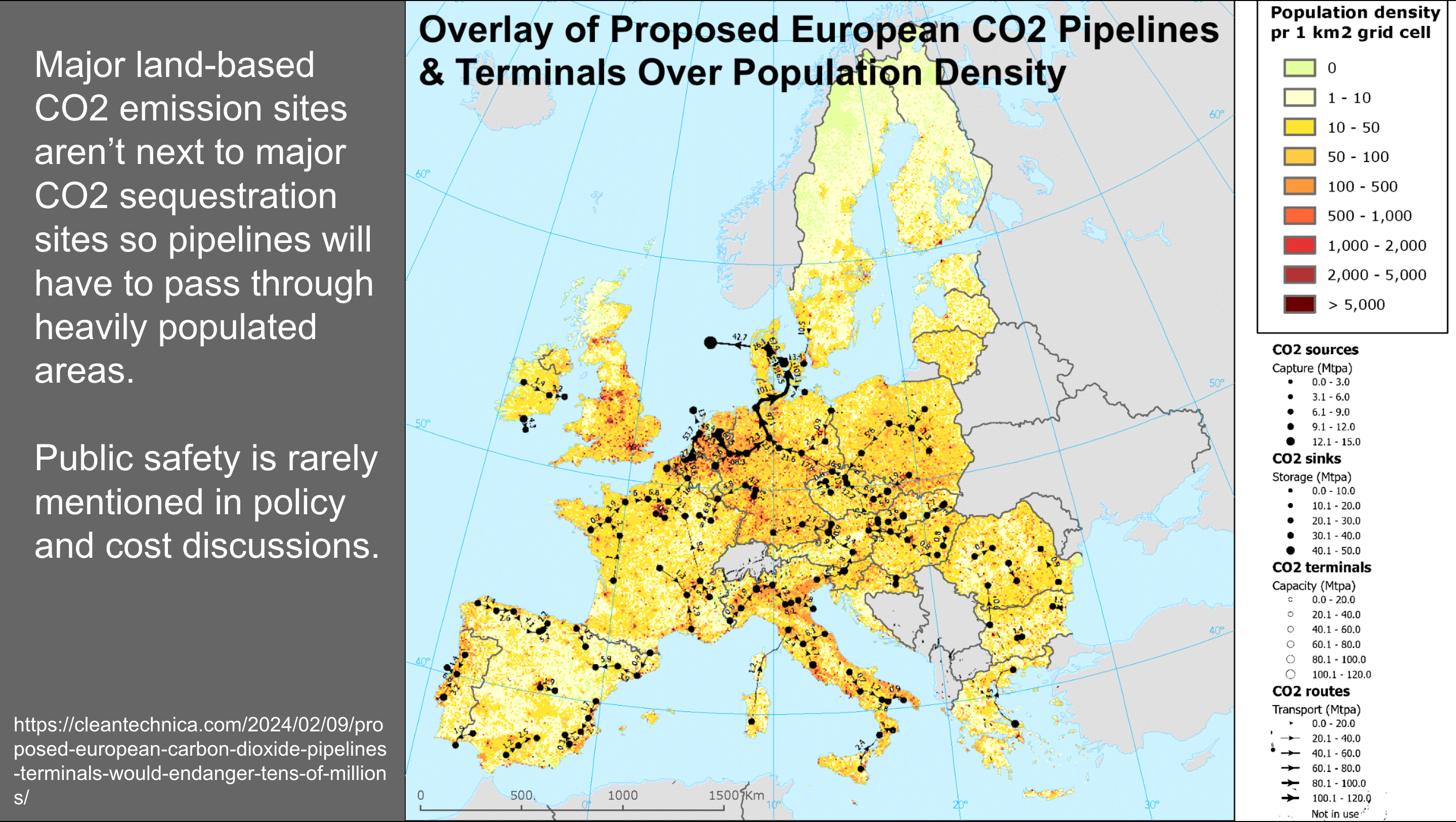

Earlier this year, I put this graphic together as part of a discussion related to CO2 pipeline safety concerns. I took a population density map of Europe and one of the proposed CO2 pipeline, terminal, and sequestration maps from an EU document related to carbon sequestration, and overlaid them.

What you see as you look at the map is that we make a lot of carbon dioxide from various processes where there are a lot of people. Europe is almost entirely more densely populated than western Mississippi. CO2 pipelines today are almost entirely for enhanced oil recovery in the southern United States, in regions that are sparsely populated.

Any climate strategy that relies to any significant extent on carbon capture and sequestration will have a lot of CO2 pipelines running through densely populated regions. The safety concerns are obvious.

There are mitigations, but all they do is make the solution quite a bit more expensive than claimed. All pipelines through even moderately populated areas will have to be gas phase. They’ll be much bigger and hence more expensive for the same mass of CO2 traversing them. They’ll require section shut-offs probably every kilometer to limit the releases of CO2. They’ll have quite expensive liability insurance.

Even then, when it comes to local acceptance of CO2 pipelines, Satartia will come up again and again and again, leading to massive opposition to them. Not in my backyard, will be the quite reasonable cry.

Why is NIMBY reasonable in this case and not others? Because concerns about wind turbines, transmission lines, and solar farms are overblown nonsense, while concerns about massive CO2 leaks are quite real. Because wind turbines, transmission lines, and solar farms stop carbon dioxide from being emitted vastly more cheaply than trying to put all of those trillions of invisible flying horses back into the barn.

Equinor’s Sleipner facility is often touted as a complete success story for carbon sequestration, putting millions of tons a year into long term sequestration under the North Sea.

That’s as big a myth as the name, which comes from Norse mythology. It was Odin’s creepy eight-legged spider horse that Loki bore after transforming into a mare and seducing the horse of a master builder who was erecting Asgard’s walls as part of some cunning plan.

Why is it as big a myth? Because all the facility does is take natural gas with too high a ratio of CO2 out from under the North Sea, strip off the CO2, and put it back under the sea bed. When the natural gas is used as intended, burning it releases about 25 times as much CO2 as was sequestered. Sleipner eliminates about 4% of the CO2 emissions from the facility. That’s not a big win, that’s a tiny improvement.
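The 4% follows from the 25-to-1 ratio. A one-function sketch, with an illustrative name, that treats total emissions as the sequestered CO2 plus the CO2 from burning the gas:

```python
def sequestered_share(combustion_to_sequestered_ratio: float) -> float:
    """Share of total CO2 (sequestered plus combustion) that is sequestered."""
    if combustion_to_sequestered_ratio < 0:
        raise ValueError("ratio must be non-negative")
    return 1.0 / (1.0 + combustion_to_sequestered_ratio)


if __name__ == "__main__":
    print(f"{sequestered_share(25):.1%}")
```

One part sequestered against 25 parts burned is one part in 26, a little under 4%.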

To be clear, Norway is a leader in ensuring that methane doesn’t leak from its facilities, unlike the USA, which is a global leader in leaking methane. And the Sleipner facility is the cheapest carbon dioxide sequestration facility in the world from a capital and operating cost perspective, mostly because so much of it was required just to get the natural gas out.

Why does Equinor do this virtuous act? Because the government pays it to. Last time I estimated it, the company had received over a billion dollars for not using the atmosphere as an open sewer.

Are there any other fossil fuel majors which are asserting sequestration virtue that doesn’t hold up to scrutiny? Certainly, ExxonMobil, famous for its internal scientists realizing that climate change was real, serious, and caused by Exxon, telling management that, and Exxon then muzzling the scientists and funding global climate denial and disinformation campaigns.

It claims to have the best CO2 sequestration facility in the world with its Shute Creek site in the USA. What does that site do? It extracts natural gas with too much CO2 (sound familiar?), strips off the CO2, and then puts it in a pipeline to be transported to nearby oil fields, where it’s used for enhanced oil recovery. That is, when the oil firms are willing to pay for the CO2. Otherwise they just vent it to the atmosphere. And, of course, every ton of CO2 used in enhanced oil recovery usually results in a lot more CO2 from the oil that comes up due to its use, and all of that natural gas both leaks and turns into CO2 when used.

There’s nothing virtuous about ExxonMobil’s ‘leadership’ in CCS. It’s a shell game.

Recently Canada passed Bill C-59, which requires firms making sustainability claims to be factual and to be able to back up those claims with third-party validation. Naturally the oil and gas industry took this as a frontal assault. Also naturally, all three major fossil fuel propaganda and lobbying groups took all of their sustainability claims off of social media and the web. That meant removing all of their media announcements as well. Why did they feel the need to do this? Because their claims were specious nonsense, they knew it, they knew that they couldn’t defend them, and Bill C-59 has teeth, with penalties of 3% of revenue to a $15 million cap per offense. That could turn into big money very quickly.

What about the idea of bolting carbon capture onto fossil fuel electrical generation plants? Well, that’s been tried too. In Saskatchewan, the utility added carbon capture technology to the Boundary Dam coal plant. It operated for a handful of years. The results?

Very expensive wholesale costs of electricity, about $140 per MWh, far above wind and solar firmed by transmission and storage. That’s even with the inevitable enhanced oil recovery with the captured CO2.

The utility is on record as saying that it is never going to consider that again.

Perhaps it is an outlier? No.

The Petra Nova coal plant in the southern USA added a carbon capture rig to one of eight boilers at the facility. Then they added a natural gas cogeneration plant to provide the electricity and heat for the carbon capture process. It required 15% to 20% of the energy generated by the coal plant to separate the CO2 out of the flue gas, clean it up, and compress it.

The plant managed to get up to 92% capture rates when the requirement is much closer to 100%, and CCS advocates claimed victory. In order to approach 100%, another carbon capture technology entirely would be required to process the output of the first carbon capture technology, so things would just get more energetically nonsensical. Of course, the methane-powered cogen plant was using what methane arrived at it and didn’t leak, and had methane slippage of its own. Further, the CO2 from the cogen plant was not captured either. The 92% is far worse than that in reality when the full end-to-end CO2e emissions are considered.

This was very expensive as well, about a billion dollars, even with governmental grants, tax breaks, and some revenue from enhanced oil recovery. The Petra Nova facility is conspicuously not expanding the carbon capture program to the other boilers.

A few years ago, I looked at all of the CCS sites in the world claiming to sequester a million or more tons of CO2 a year. I used the Global CCS Institute’s public data for this. What’s the GCCSI? It’s a lobbying and propaganda group funded by the oil and gas industry.

Unsurprisingly, the data set was missing a lot of relevant data. It had the site, the location, and the claimed annual sequestration. It didn’t have the capital costs, operating costs, where the CO2 was coming from, or whether the CO2 was used for enhanced oil recovery.

I went out and found all of that data from other public sites to assemble a robust enough data set. I initially made the generous assumption that all CO2 was captured, and so only adjusted claimed sequestration for enhanced oil recovery, for which I made the generous assumption of low enhanced oil recovery.

The projects went back to the 1970s, so I determined how much money had been spent each decade. Then I asked the simple question about how much carbon avoidance that money could have bought if it were spent on wind and solar instead. After all, each MWh of renewably generated electricity pushes a MWh of fossil fuel generated electricity off of the grid, avoiding the emissions from it. I adjusted generation for historic capacity factors as well.

With all of these benefits of the doubt, the money spent on CCS would have avoided three times as much CO2 as was sequestered by these facilities over their lifetime to the date of my analysis.
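The shape of that comparison can be sketched as below. This is not the original analysis or its data set: the function names and every input in the usage example are hypothetical round-number placeholders, chosen only to show how spending, capacity factor, and grid emissions intensity combine.

```python
HOURS_PER_YEAR = 8_760


def avoided_tons(spend_usd: float, capex_usd_per_mw: float,
                 capacity_factor: float, grid_tons_per_mwh: float,
                 years: float) -> float:
    """CO2 avoided if a sum were spent on wind or solar capacity instead.

    Assumes each renewable MWh displaces one fossil MWh at the given
    grid emissions intensity, as described in the text.
    """
    capacity_mw = spend_usd / capex_usd_per_mw
    mwh_per_year = capacity_mw * capacity_factor * HOURS_PER_YEAR
    return mwh_per_year * grid_tons_per_mwh * years


def avoidance_ratio(avoided: float, sequestered: float) -> float:
    """How many times more CO2 was avoided than sequestered."""
    return avoided / sequestered


if __name__ == "__main__":
    # Placeholder inputs only: $1M spent, $1M per MW, 50% capacity factor,
    # 1 ton of CO2 per fossil MWh, 10 years of operation.
    tons = avoided_tons(1_000_000, 1_000_000, 0.5, 1.0, 10)
    print(f"{tons:,.0f} tons avoided")
```

A fuller version would repeat this per decade with that decade's capital costs and capacity factors, then compare the sum against the adjusted sequestration claims.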

And, of course, as the case studies show, the majority case is actually CO2 that was already sequestered being pumped up from underground and put back again for enhanced oil recovery or federal tax breaks. When the CO2 was previously sequestered, claiming virtue in extracting and re-sequestering it is like walking up to a garbage can on the street, kicking it over, then righting it and refilling it and demanding to be commended for your civic virtue.

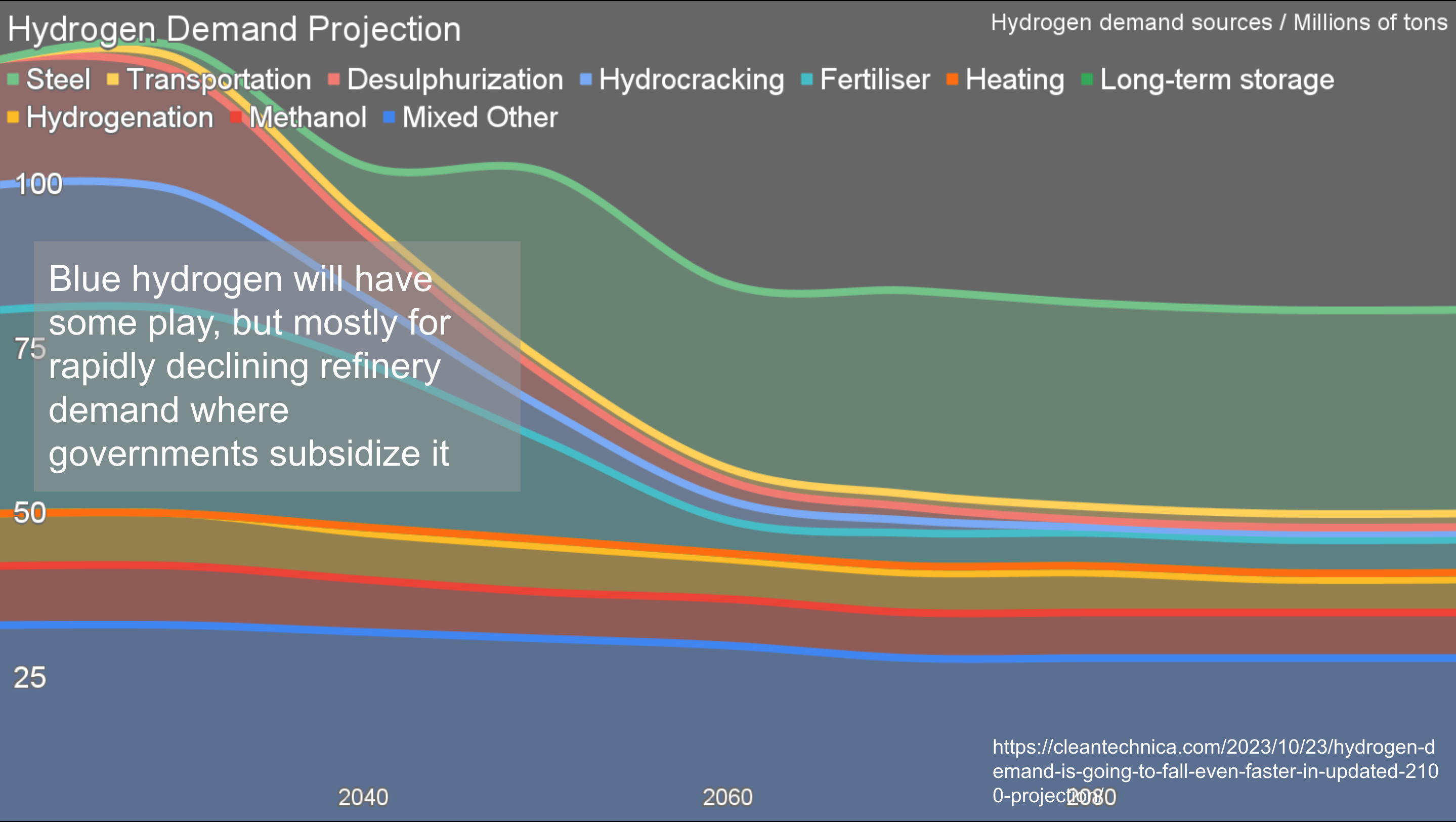

There are places where carbon capture will end up penciling out as the optimal choice, but they are few and far between. One of the great hopes is for hydrogen. This is the most recent iteration of my demand projection through 2100 for hydrogen.

One thing to remember is that hydrogen is a greenhouse gas emissions problem on the scale of all aviation globally right now, over a billion tons of CO2e annually. That this huge climate problem is being touted as a climate solution is a bit of a head-scratcher.

Even the dimmest eyes will note that I project that demand is going down, not up. That’s because I’ve looked at all of the existing and purported demand sectors for hydrogen and have a good sense of which direction they are heading. Ground transportation is just going to electrify, and that’s a huge demand area for fossil fuels today. Electrical generation is going to be renewables, transmission and batteries, with hydrogen being an expensive and lossy loser in the competition. Biofuels are completely fit for purpose for the parts of aviation and maritime shipping that won’t electrify and will still persist. After all, 40% of bulk shipping is of fossil fuels as cargo, and that’s going away.

Further, the biggest current demand segment for hydrogen today is from oil refineries. It’s used to take impurities, especially sulfur, out of crude oil, and to crack it into lighter and heavier fractions. The heavier and higher sulfur the crude oil, the more hydrogen is required. I estimate that 7.7 kilograms is required for a barrel of Alberta’s crude oil. That hydrogen has to be decarbonized to decarbonize oil and gas production, so it’s going to be blue or green hydrogen, both of which are more expensive.
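To get a feel for what 7.7 kilograms per barrel means at scale, here is a rough Python sketch. Only the 7.7 kg figure comes from the text. The daily barrel count is a hypothetical round number, and the emissions intensity of unabated hydrogen is an assumed ballpark value, not a number from my projection.

```python
KG_PER_TON = 1000


def hydrogen_tons_per_day(barrels_per_day, kg_h2_per_barrel=7.7):
    """Hydrogen needed per day to process the given crude volume."""
    return barrels_per_day * kg_h2_per_barrel / KG_PER_TON


def hydrogen_co2e_tons_per_day(barrels_per_day, kg_co2e_per_kg_h2,
                               kg_h2_per_barrel=7.7):
    """Daily CO2e from making that hydrogen without abatement."""
    h2_tons = hydrogen_tons_per_day(barrels_per_day, kg_h2_per_barrel)
    # kg CO2e per kg H2 is numerically the same as tons CO2e per ton H2
    return h2_tons * kg_co2e_per_kg_h2


# Hypothetical throughput of one million barrels per day
h2_needed = hydrogen_tons_per_day(1_000_000)

# Assumed intensity of 10 kg CO2e per kg of unabated hydrogen (illustrative)
h2_emissions = hydrogen_co2e_tons_per_day(1_000_000, kg_co2e_per_kg_h2=10)

print(f"{h2_needed:,.0f} t H2/day, {h2_emissions:,.0f} t CO2e/day")
```

The heavier and more sour the crude, the larger the per-barrel figure, which is why the cost of decarbonizing that hydrogen falls hardest on heavy, high-sulfur producers.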

That means that the quality discount for Alberta’s and Venezuela’s products is going to go up just as oil demand is flattening and declining with peak oil. The world will be awash in light, low-sulfur oil, so heavy, high-sulfur oils won’t be economically competitive. That means that refinery demand for hydrogen will plummet much faster than oil refining will decline.

In other words, blue hydrogen will be used in refineries, but it has a short demand lifespan. As a result, the related CCS will be limited too. There will be some, and it will be fairly cheap as refineries tend to be built in oil country where there are the skills, experience, firms, equipment and geological characteristics to support carbon sequestration relatively inexpensively.

The rest of demand will mostly be met with green hydrogen, where carbon capture and sequestration are unnecessary.

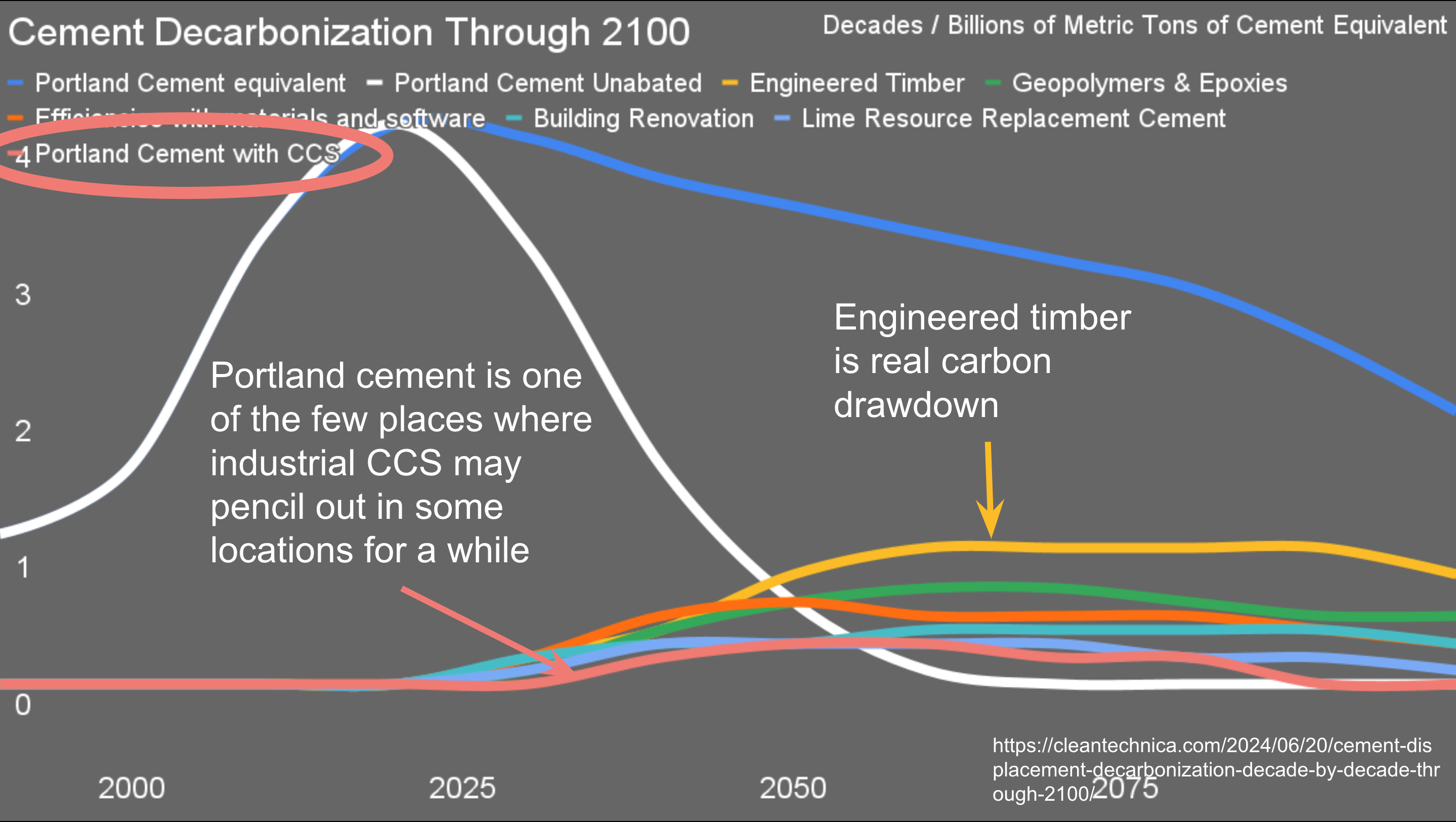

I recently completed my end-to-end assessment of cement, concrete, and all the purported solutions, as well as one of my usual projections of demand and winning solutions through 2100. For years I have been asserting that one of the few places where blue hydrogen and its related carbon capture and sequestration might pencil out is on cement plants.

The problem is process emissions from decomposing limestone into lime — the primary component of cement — and carbon dioxide, which historically has been a conveniently odorless invisible waste product that disposes of itself. We can electrify limestone kilns, but as long as we are turning limestone into lime, we’re going to get the CO2 as part of the bargain.

In my analysis, I worked out the cost-adders over basic unabated cement and concrete for various solutions. Where electrified heat or a solution like Sublime Systems’ electrochemical process is used to decompose limestone, capturing the fairly pure CO2 waste stream is much cheaper. Where cement plants coincidentally sit next to good geological sequestration sites, getting rid of the CO2 will be cheap. Where that combination can be made to work, it might be cheaper to do that than the alternatives.

But still, I only give CCS about half a century of relevance in the solution space.

One of the biggest levers for cement emissions, engineered timber, is very interesting in discussions of CCS. Engineered timber is basically plywood. It can be made into structural beams, walls and floors, replacing reinforced concrete. Because it’s stronger, one ton of engineered timber replaces 4.8 tons of reinforced concrete. Because it’s lighter, foundations require a lot less reinforced concrete.

Its full lifecycle emissions, from forest to building, are already much lower than those of reinforced concrete, at 200 to 300 kg of CO2e per ton of engineered timber. Those lifecycle emissions are from extraction, shipping and milling with fossil fuel powered machines and fossil fuel generation of electricity. Those emissions are going away.

And engineered timber is made of wood. Every ton of wood has breathed in about a ton of atmospheric carbon dioxide and breathed out all of the oxygen. The carbon is sequestered. Putting it into a building sequesters it for 60 to 100 years. When the building is demolished, useful beams and panels can be reused. The rest can have the carbon sequestered permanently through one of several approaches, from thermolysis to create biocrude and biochar which can be spread in forests to return nutrients, to sinking it in the ocean’s depths.

At present we harvest in the low billions of tons of timber, turning a lot of it into single use paper products and a lot of it into heat for various reasons. Diverting timber from those wasteful uses into much higher merit engineered timber means that an enormous tonnage of it could be made available from existing forestry programs. And forestry can easily be sustainable.

The biological pathway for engineered timber has two carbon benefits. The first is that it avoids all of the CO2 from reinforced concrete, a big win. Because one ton of it displaces 4.8 tons of reinforced concrete, that’s a lot of avoided CO2. But then it’s a sequestration pathway for atmospheric CO2 as well.
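The two benefits can be added up per ton of engineered timber. The Python sketch below uses the figures from the text (4.8 tons of reinforced concrete displaced, 200 to 300 kg of CO2e lifecycle emissions, roughly a ton of CO2 stored per ton of wood). The emissions factor for reinforced concrete is a placeholder assumption for illustration only, not a number from my assessment.

```python
def net_benefit_tons_co2e(concrete_tons_co2e_per_ton,
                          timber_lifecycle_tons_co2e=0.25,
                          concrete_tons_displaced=4.8,
                          co2_stored_tons=1.0):
    """Net CO2e benefit of one ton of engineered timber.

    Benefit = avoided reinforced concrete emissions
              - timber's own lifecycle emissions
              + atmospheric CO2 stored in the wood.
    """
    avoided = concrete_tons_displaced * concrete_tons_co2e_per_ton
    return avoided - timber_lifecycle_tons_co2e + co2_stored_tons


# Placeholder: 0.2 t CO2e per ton of reinforced concrete (assumed, illustrative)
# Timber lifecycle emissions use the midpoint of the 200 to 300 kg range
benefit = net_benefit_tons_co2e(concrete_tons_co2e_per_ton=0.2)

print(f"Net benefit per ton of engineered timber: {benefit:.2f} t CO2e")
```

As timber's lifecycle emissions fall toward zero with electrified machinery and cleaner grids, the middle term shrinks and the net benefit grows.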

Trees grow themselves if we let them. So do plants. Nature naturally sequesters CO2. The glomalin protein on mycelium roots in undisturbed soil pushes carbon atoms effectively into long term soil capture. If we stop disturbing the soil, and we will through low-tillage farming and rewilding, the CO2 will be drawn down.

Enhancing natural carbon sinks is vastly cheaper and easier than carbon capture and sequestration. It just doesn’t benefit the fossil fuel industry. That’s why the nations pushing CCS hard are the ones that extract, process, refine, distribute and sell a lot of fossil fuels from their lands and underneath their waters.

Countries that don’t have big fossil fuel reserves — most of them — are sensibly following strategies focused on nature-based solutions. They won’t help us get to zero by 2050, but neither will mechanical or industrial CCS, and the nature-based solutions will address the problems of the next hundred years and the centuries after that.
