This article is about critical thinking and bias. If you are interested in why people (all of us) often hold “incorrect” beliefs, read on. This subject is big and “hairy” in that there are many “forcings” that impact how we humans think, understand, and believe. This topic really deserves a book (or a few books), not just a short article. That said, this article does offer a decent snapshot of the issue, which, as you will soon see, is rather ironic, but I hope you find it useful — I sure did!

My training as a management consultant largely focused on understanding the “big picture,” so I am always disappointed in myself when I am drawn into arguments over minutiae. Unfortunately, this happens regularly, since I am human and apparently not very smart. I’ve had quite a few conversations over the past year with some very smart people about climate change solutions that I just assumed we would all agree on, since the solutions were well accepted by the best research and experts in the field. Not so much. Some of these smart people had rather strong opinions that BEVs and renewable energy options, for example, would not even help society reduce CO2, as per the title of this article.

My reaction when things do not make sense is to “zoom out” to see the big picture and to study and research what is happening. I rely heavily on expertise, data, science, technology, engineering, and math as tools to help me understand the world. When people disagree with me on a point, I therefore try to find the best expertise, data, and STEM information I can find to make sure I am understanding things correctly and to understand the root of the issue.

Before I became a management consultant, I completed a degree in psychology, so I am also a big believer in meta-cognition (thinking about thinking). A key personal goal in my life is the same as the goal of science: to understand the world and to increase my body of knowledge. I love science since it cares nothing about mundane things like “winning” arguments. Science recognises that the only thing that matters is what is real. Opinion is plain silly in this context. I just assumed people with decent critical thinking skills would arrive at conclusions similar to mine. I was wrong.

Critical Thinking

My research for this article quickly led me to John Cook, a senior research fellow at the Melbourne Centre for Behavior Change at the University of Melbourne. He obtained his PhD from the University of Western Australia, get this, by studying the cognitive psychology of climate science denial! A perfect start for this article.

Cook has been working in this field since 2007, has published several books, and has won several awards for advancing climate change knowledge. He is also the creator of the website Skeptical Science, and he wrote a book, a teacher’s guide, and a “gamified app” targeting kids and educators called Cranky Uncle vs. Climate Change to help educators teach critical thinking skills in class (it is quite fun).

In 2013, Cook also published a paper finding a 97% scientific consensus on human-caused climate warming, which has been broadly referenced, including by world leaders like President Obama and UK Prime Minister David Cameron. John clearly can be considered an expert in this exact subject matter.

In a nutshell, Cook found that the types of errors climate change deniers make fall into 5 categories — fake experts, logical fallacies, impossible expectations, cherry picking data, and conspiracy theories. Here is a summary of each category.

Fake Experts — When the world’s experts disagree with you, substitute a subject matter expert with anyone who “seems” credible. An example would be using a famous actor or someone who seems to be qualified on the surface, like a scientist with a PhD in another discipline, but one who lacks relevant knowledge or experience in the subject matter.

Logical Fallacies — This includes a broad set of logical errors, but they all basically lead one to jump to a conclusion that does not fit the initial premise.

Impossible Expectations — In this case, a person may suggest renewable energy options like wind and solar are bad since they still pollute due to mining and production. The expectation is that only perfect solutions (no pollution as opposed to less) will suffice.

Cherry Picking Data — When someone denying climate science finds the evidence to be against them, they can just cherry pick the data they want to communicate while ignoring data that goes against their narrative. Cherry picking can include a focus on old data or narrow data sets instead of considering the best science — or all relevant data (the big picture). The issue of climate change is a massive “hairy” issue that includes energy use, energy production, transportation, construction, food, population, sustainability, etc., and clearly all these things matter.

Conspiracy Theories — When the world’s experts do not support your position, just make stuff up or claim the world’s experts are on the take. Tip: Occam’s Razor is a great critical thinking tool to help dispel conspiracy theories. Occam’s Razor is an idea popularized by the 14th-century friar William of Ockham. It suggests that when you have two competing explanations for a phenomenon, the simpler one (the one requiring fewer assumptions) is more likely to be right.

Is critical thinking enough?

I found Cook’s research and the Cranky Uncle app to be fun as well as useful. Not only do they help us recognize the common critical thinking traps people use to refute science, but they also help us monitor ourselves to make sure we do not fall for these same traps. I would recommend this app to writers, educators, and anyone who wants to improve their own critical thinking and/or get better at countering anti-scientific narratives.

That said, I felt that there was still something missing in the formula for understanding and countering anti-scientific arguments. When I thought about the conversations I’ve had with people who were falling for these Cranky Uncle traps, it did not explain at all why these people were falling for them. These were often smart and well-educated people, after all! While some of these people were perhaps not strongly educated in science and math, all should have had reasonably good critical thinking skills. So, what was happening? Why would these smart, competent people be falling for these traps? Why would these people seemingly abandon their better judgement and instead reach into the Cranky Uncle toolbox of anti-science misinformation tricks?

Fortunately, this area has been well studied.

Motivation

The first thing to do is recognize that different people have different reasons to debate an issue, and their motivations may be much different from yours. It is important to understand what both parties want out of the debate to make sure it is even worth having.

- Learning — To share knowledge, exchange ideas, and understand the perspectives of others. You want to understand the world better. The focus of these conversations will be on experts, science, data, reason, engineering, and math. The conversation will usually be respectful, and it will never be tribal.

- Winning — To win the debate and/or to showcase/test your debating skills. These types of conversations are simply about beating others in a debate, and which side of the topic the person is on is not even particularly important. These conversations are usually respectful, but some debaters will use aggression if they feel this will help them win.

- Agenda — To either promote your values and beliefs and/or to challenge the values and beliefs of others — whether they be environmental, business, political, religious, philosophical, or something else. These conversations are always tribal, usually disrespectful, and critical thinking errors would be the norm.

- To throw darts — Some people (i.e., trolls) will try to bully others just to cause injury. They may or may not have an obvious agenda behind it. Conversations will be disrespectful and error prone. According to Psychology Today, the best way to approach these conversations is to either ignore them or to find a way to laugh at their position. The wrong thing to do is to respond negatively, as this is what they want (this will only “feed” the troll).

I am sure there are other motivations that I have not covered here as well, plus anyone may of course have a combination of these motivations. Let’s now look at types of biases that affect critical thinking.

Cognitive Bias — What you want to believe

In addition to personal motivation, another reason that people fall into and/or use faulty critical thinking strategies has to do with cognitive bias.

We Think in Heuristics

Amos Tversky and Daniel Kahneman were psychologists and authors who began their work on decision making and cognitive bias in the 1970s. Their research demonstrated that our decisions are rarely purely rational. They found that people typically do not rely very much on expert opinion, logic, or data, but instead make their decisions based on simple heuristics. Heuristics are mental shortcuts, usually based on very little information, that people use to make quick decisions that are good enough rather than fully optimized. They also noted that these simple heuristics inevitably introduce huge errors in our decision making since they are based on very little actual science, data, or knowledge.

This observation is important. Most of us know surprisingly little about what we are talking about.

Tversky and Kahneman also introduced the concept of “anchors.” The idea is based on observations of how our current beliefs and understandings resist change. The term “anchors” is most often used in the context of the first information a person happens to see (anchoring bias). For example, car salesmen will introduce a car’s list price as the starting point for a negotiation rather than a price closer to what they would actually sell at. The reason is that buyers use this high “anchor price” as their reference point, and a higher reference point makes the final selling price feel more palatable to the buyer. The research also observed that people are reluctant to change their existing anchor position even when they know their anchor is clearly wrong and even after being provided with better information. People will eventually move away from their anchor point, but it is a slow process, and they will not move far from it in one step.

The message here is people (all of us) know very little about anything, and once we form an opinion, we tend to hold onto it.

Confirmation Bias

Confirmation bias refers to a person’s tendency to only seek out information that agrees with their existing understanding. By only seeking information that supports our existing heuristics and anchors, we don’t have to change them. Changing our understanding takes work! I suspect confirmation bias is strongly related to, and helps reinforce, the heuristics and anchors described by Tversky and Kahneman.

Dunning Kruger Effect

David Dunning is a PhD social psychologist who worked at Cornell University and is now with the University of Michigan. He has published more than 80 peer-reviewed papers and is best known for his work on cognitive bias. Justin Kruger is a PhD psychologist and now professor at the New York University School of Business. Dunning and Kruger are best known for their 1999 study describing what is now known as the Dunning Kruger Effect.

The Dunning Kruger Effect is a type of cognitive bias whereby people with limited knowledge tend to overestimate their knowledge and abilities on a subject, while people with excellent knowledge tend to underestimate their knowledge and abilities.

The reason scientists tend to underestimate their knowledge may have to do with how science works. Science aims to build a body of knowledge so what we think of as facts or truth today may change in the future as science gains knowledge. People who know a lot about a subject also appreciate how complicated, nuanced, and potentially uncertain it is and how much more there is to learn.

This bias affects all of us, so all we can do is be aware of it and try to be objective about it. Internalizing the “stages of understanding” model outlined below may help.

Stages of Understanding

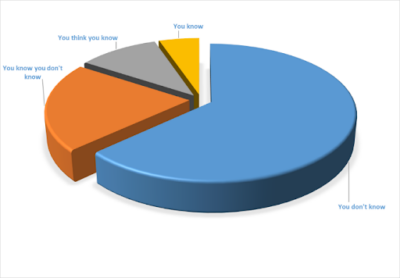

You may have come across the following model describing four levels of understanding and knowledge. I’m not sure who came up with this model, but the ideas in it date back to Socrates, and there are many variations of this model in use today. Here is the model as applied to understanding climate science.

- You don’t know — You have not read or heard much about the science behind climate change. Since most people have only heard or read non-expert material about climate change, this is the stage most people in society are at.

- You know you don’t know — You have gained enough knowledge about climate science by reading or listening to expert scientists working in the field. At this stage you begin to realize how little you know.

- You think you know — You continue to gain more knowledge in climate science by reading and perhaps by taking university level science courses, but your knowledge is incomplete. At this level you have enough knowledge to speak competently about climate change, but you are at a very high risk of getting things wrong.

- You know — You become an expert in climate science. You now understand the limits of what you know. You know what you know, and you know what you do not know. This stage is the level of expert scientists doing research in climate change. Please note, a PhD scientist who is not actively doing research in climate science would not be at this “expert” level.

Challenge — Test yourself on the following examples to see what you really understand. Describe in detail how CO2 causes the earth to warm. Want something easier? Explain in detail how a zipper works. Now, fact check yourself. How did you do?

Emotional Bias — What you need to believe

Emotional bias is a well recognized factor affecting decision making. It refers to how our emotions, wants, and fears influence what we believe. As author Upton Sinclair said, “It is difficult to get a man to understand something when his salary depends on his not understanding it.”

Emotional bias is affected not only by your job sector, but by your friends, family, community, lifestyle, and things like the fear of the unknown.

If your friends, or perhaps your church, are all against the science of climate change, you risk being mocked, cast out, or worse by your group if you take a position against them. Standing out from the herd is dangerous for any species, and people know this instinctively!

Emotions & Decision Making

It is tempting to assume that emotion is always a barrier to good decision making, but research shows it is not that simple. Emotion, in fact, may be necessary for good decision making!

Antonio Damasio is a well respected and acclaimed neuroscientist and author working out of the University of Southern California. His main body of research focuses on neurobiology, specifically the neural systems which underlie emotion and decision making. One of the key things Damasio found was that emotion and decision making were linked, and that emotion is necessary for decision-making. His most famous quote is “We are not thinking machines that feel, but rather we are feeling machines that think.” His research found that when we lack emotion, as certain brain-damaged patients do, we become incapable of making decisions.

This does make sense since decisions are often very complicated, and we often must make decisions based on incomplete information. Making decisions requires us to weigh the pros and cons of a topic and to integrate many pieces of information. It also may require us to assess how the decision would impact our internal values and understanding as well as those of our social groups, etc.

In this context, perhaps emotion is the “tool” our brains use to integrate the many variables that go into making decisions. In any case, emotion appears to be a necessary component of thinking, including critical thinking. The difference between good decision making and bad may simply be a function of the quality and amount of information the person has ingested plus how emotionally comfortable the person is with the truth.

Foundational and Compartmentalized Beliefs

This is perhaps the most interesting and important factor in how humans think. Foundational beliefs in this context refer to how some of our beliefs and understandings are influenced by more deeply held beliefs and understandings. These beliefs can form the roots of understanding for many other things we believe.

A great example would be how a person may have a foundational belief that people, especially those with power and influence, are “bad” and are “out to get them.” In this case, the person may have a foundational belief that only friends or people they have developed trusting relationships with can be relied upon and trusted. If a person has this type of foundational belief, they probably will not accept the best expertise or science in any subject matter area.

Other foundational beliefs may include a belief in fairness, a belief in self-interest over the public good … or the opposite, a belief in the public good over self interest, a belief in religion, etc. It is these types of foundational heuristics that may be at the root of why people disagree on everything from climate change to politics!

Everyone will have some foundational beliefs like this, but all of us also appear to compartmentalize some of our beliefs and understandings. A great example is how a person may strongly believe that the world needs to act on climate change while building a monster house or taking numerous long-distance vacations every year. A belief in sustainability clearly implies that one should follow a sustainable lifestyle, after all, yet many of us compartmentalize these opposing behaviors. When beliefs and behavior are not aligned, or when foundational beliefs conflict with one another, the result can be uncomfortable cognitive dissonance, and one way to relieve it is to compartmentalize these things. I struggle with this one myself, and I think most of us do. What kind of lifestyle is reasonable and sustainable? … I’ll leave that question for another article.

Who is most affected by cognitive and emotional bias?

Perhaps the biggest lesson all of us need to internalize is that we are all idiots. All of us are affected by cognitive and emotional bias. I’m obviously saying this in a tongue-in-cheek way, but it isn’t far off. Consider how the following very smart, well-trained people were profoundly wrong about product developments, often in their own field, because of their cognitive biases.

- Telephones — 1876: “The Americans may have need of the telephone, but we (British) do not. We have plenty of messenger boys.” William Preece, Chief Engineer, British Post Office. The global telecommunications industry was worth $2.3 trillion in 2019.

- Alternating current power — 1889: “Fooling around with alternating current (AC) is just a waste of time. Nobody will use it.” Thomas Edison. The top 10 (primarily AC) electric utilities in the US were worth a combined $1.14 trillion in 2019.

- Cars — 1903: “The horse is here to stay but the automobile is only a novelty — a fad.” President of the Michigan Savings Bank advising Henry Ford’s lawyer, Horace Rackham, not to invest in the Ford Motor Company. There were 9.2 million horses and 4.6 million people involved in the horse industry at the time.

- Computers — 1943: “I think there is a world market for maybe five computers.” Thomas Watson, President of IBM. There were 2 billion PCs in the world in 2015.

- Cell Phones — AT&T in 1985 commissioned a report to determine “How many cell phones will there be in 2020?” The well-regarded consultancy firm McKinsey & Co provided their answer — “900,000.” There were 4.77 billion mobile phone users in 2017.

- Apple iPhone — September 2006: “Everyone’s always asking me when Apple will come out with a cell phone. My answer is, ‘probably never.’” David Pogue, technology writer, New York Times. Apple released their first iPhone just 9 months later in June 2007.

- iPhone Market Share — 2007: “There’s no chance that the iPhone is going to get any significant market share.” Steve Ballmer, Microsoft CEO. Apple has been in the top 5 since 2009 with 19.2% market share.

The lesson here is simple. All the people in the above examples were competent, were even very smart, and were often top experts in their field, and yet all were unable to see past their biases. If these experts have trouble with bias, then clearly all people do — including you and me!

The Scientific Method

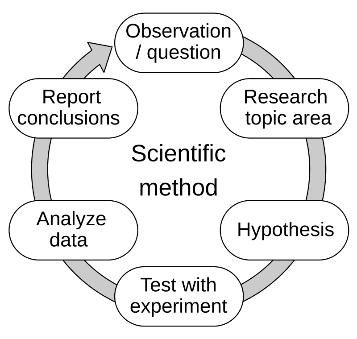

Philosophers and scientists have long recognized that cognitive bias is not at all helpful in the quest to understand the world. Exploration into best practices in science to reduce cognitive bias therefore began centuries ago. The roots of the scientific method in fact date back to the 16th or 17th century (or arguably even earlier), and their purpose was to address scientific bias and error. The term “scientific method” became recognized in the 19th century.

The scientific method is a strategy that helps scientists to isolate their cognitive biases. It forces them to stand beside their biases and to be objective. The process is shown in the diagram, but it also includes additional measures, such as peer review, transparency, and replication.

In short, the scientific method recognizes that bias is a problem and addresses it by trying to control for bias so it cannot interfere with the experiment. Good science tries to control bias since the goal of good science is to understand the world. Science does not even have hard truths for this reason; it only suggests “this is our current best understanding” for any given topic.

Summary

- Smart people often hold different opinions from each other even when they have access to the same information.

- It helps to understand our own motivations and the motivations of others before we start any debate to make sure our goals for the conversation are compatible.

- While critical thinking skills are very important, they do not appear to be enough, since we all tend to lose focus on critical thinking when it does not fit what we want or need to believe.

- The roots of these critical thinking errors are cognitive and emotional biases.

- Cognitive biases stem from how we all tend to have only a very shallow understanding of any subject matter, how we tend to think we know more than we really do, and how we do not even seek out valid information if it runs contrary to our biases. All of us also tend to hold onto our opinions even when faced with better information.

- Emotional biases refer to how our desires and fears influence our understanding. When we emotionally “need” to believe something, we tend to. Everyone is susceptible to this.

- Differences in foundational beliefs and values may be at the root of why people disagree on subjects ranging from climate change and BEVs to politics. If so, our conversations should be focused on finding common ground in our foundations and/or on challenging our foundational heuristics.

- If our goal is to understand the world better, we need to build our knowledge from solid foundations, we need to be able to emotionally “handle the truth,” and we need to have good critical thinking skills. We need to think like scientists. Scientists use strategies to reduce cognitive and emotional bias by becoming experts in their field and by employing the scientific method.

Recommendations

- Try to understand your own motivations and biases as well as those of the person you are talking to. Ask yourself/them why they believe what they believe. Ask what would change your/their minds. Consider if you/they have an emotional attachment to this position due to a job, peer group, lifestyle, etc. Your first goal is to figure out whether a conversation is worth having at all.

- Start by understanding root causes by asking questions that are “foundational” as opposed to focusing on details. If the person only values the opinions of people they know and trust vs. experts, the root issue that needs to be addressed is about trust in people and the value of expertise. Likewise, if the person emotionally needs to believe climate change is a hoax, perhaps since they work for an oil company, the root issue is fear of losing a job or high pay. In this case, the focus of the conversation should really be on how the world is always changing, how skills are transferable, and how massive job opportunities are opening in emerging sectors. The world is always changing after all, and we all need to change with it.

- It is also important to remember that no one moves quickly or far from their current anchor position, even when presented with better information. Changing opinions and growing our understanding clearly happens, but it is a slow process for all of us. Effective debate requires us to engage each other at the level of our anchors if we want the conversation to be productive. Patience is key.

- Become adept at critical thinking yourself. The Cranky Uncle app is an excellent tool and will help you to recognize your own critical thinking errors as well as those of others. When you understand the nature of the error you can better deal with it. Pushback on critical thinking errors generally means there is an unresolved foundational, cognitive or emotional bias at play. When you see this happen, change the focus of the conversation and look for the deeper root cause of the bias.

- Understand that any time we or someone else takes a position on a topic that is not consistent with the best information and the thinking of most experts working in the field, the chances of us being wrong will be very high. This is especially true when the science is well understood and when most experts working in the field agree. Experts are not perfect, but they are better than non-experts.

By Luvhrtz
